[GoogleML] Adam Optimizer

2023. 9. 20. 22:23ㆍArtificialIntelligence/2023GoogleMLBootcamp

Gradient Descent with Momentum

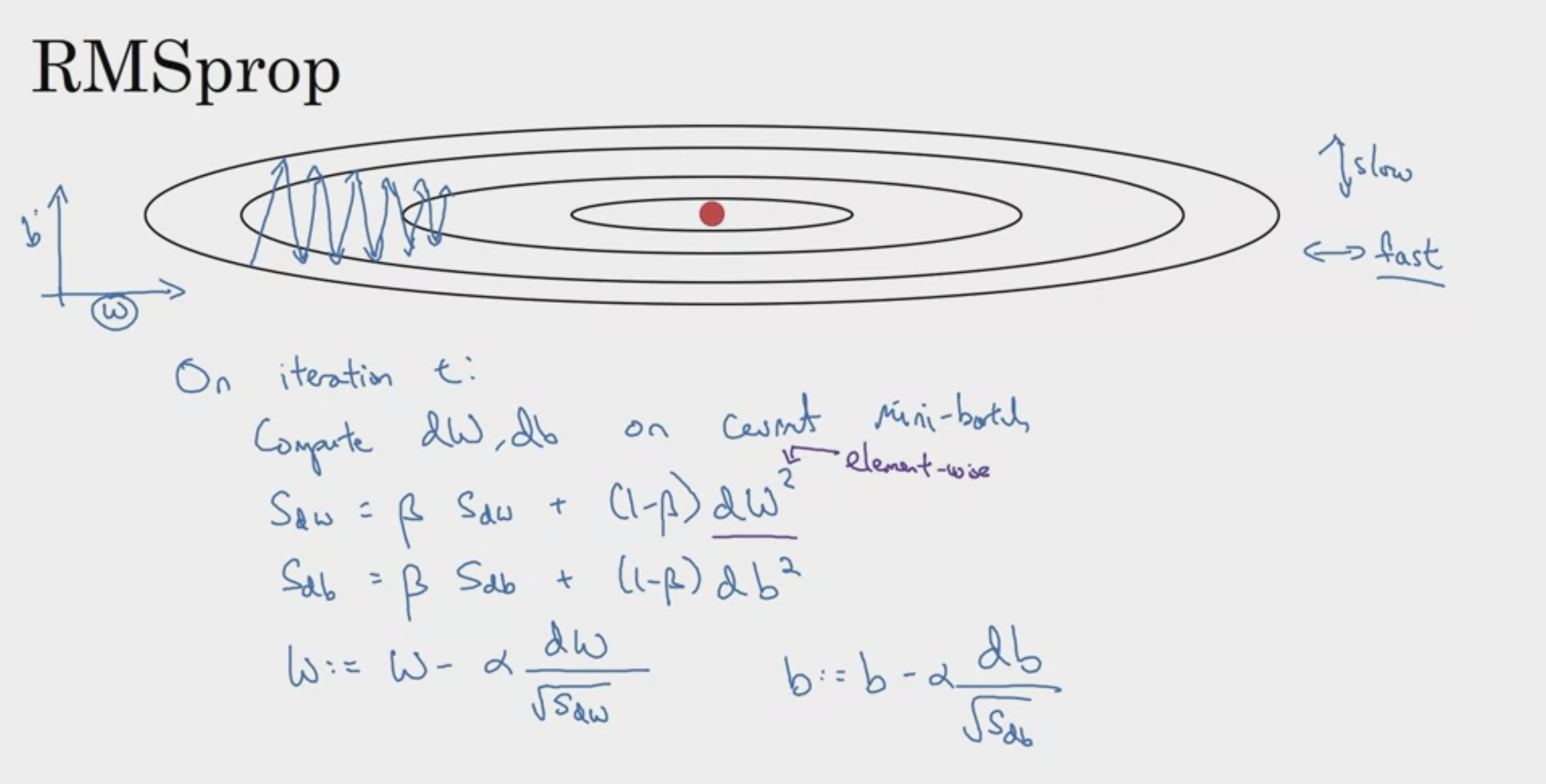

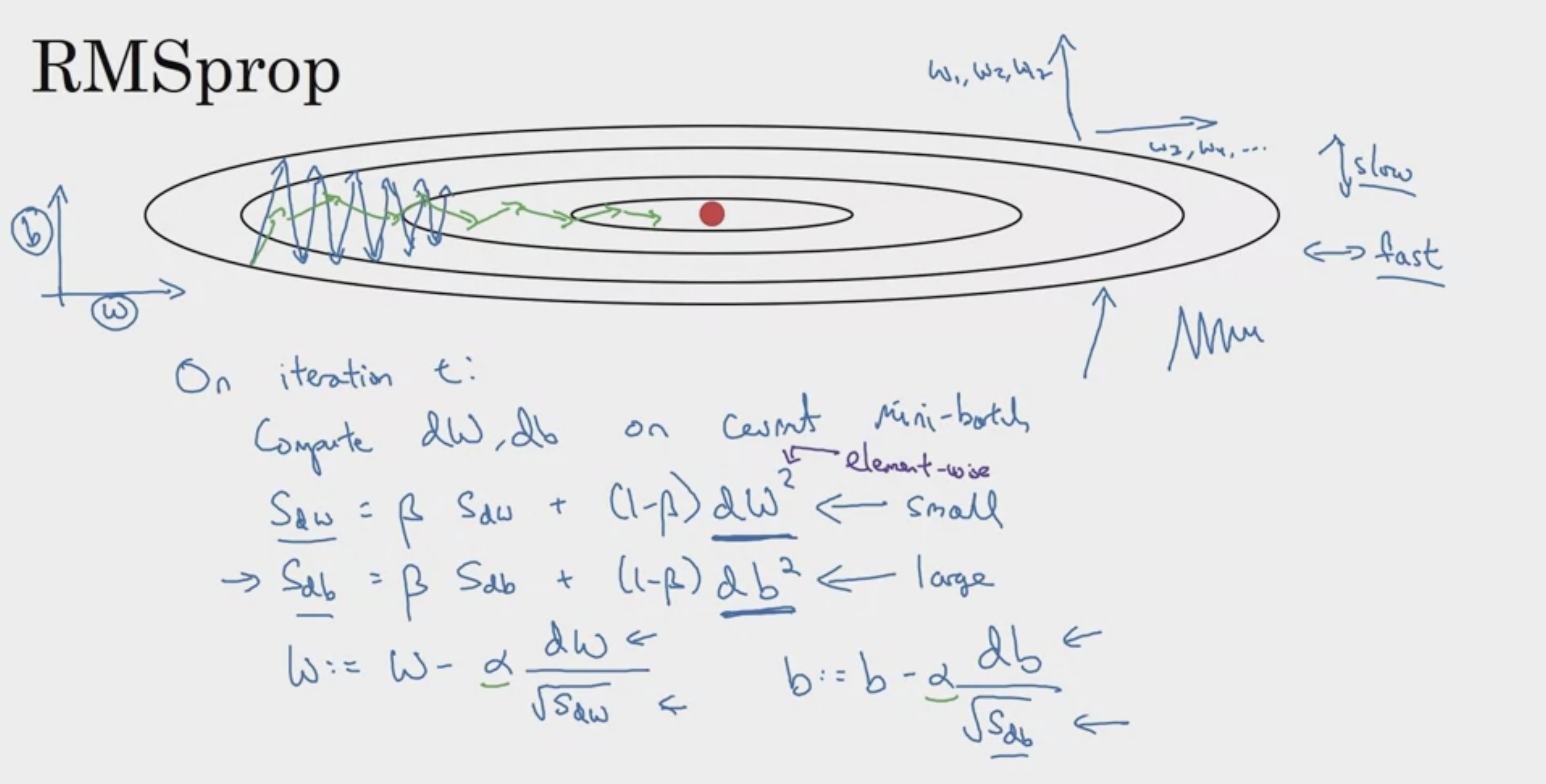

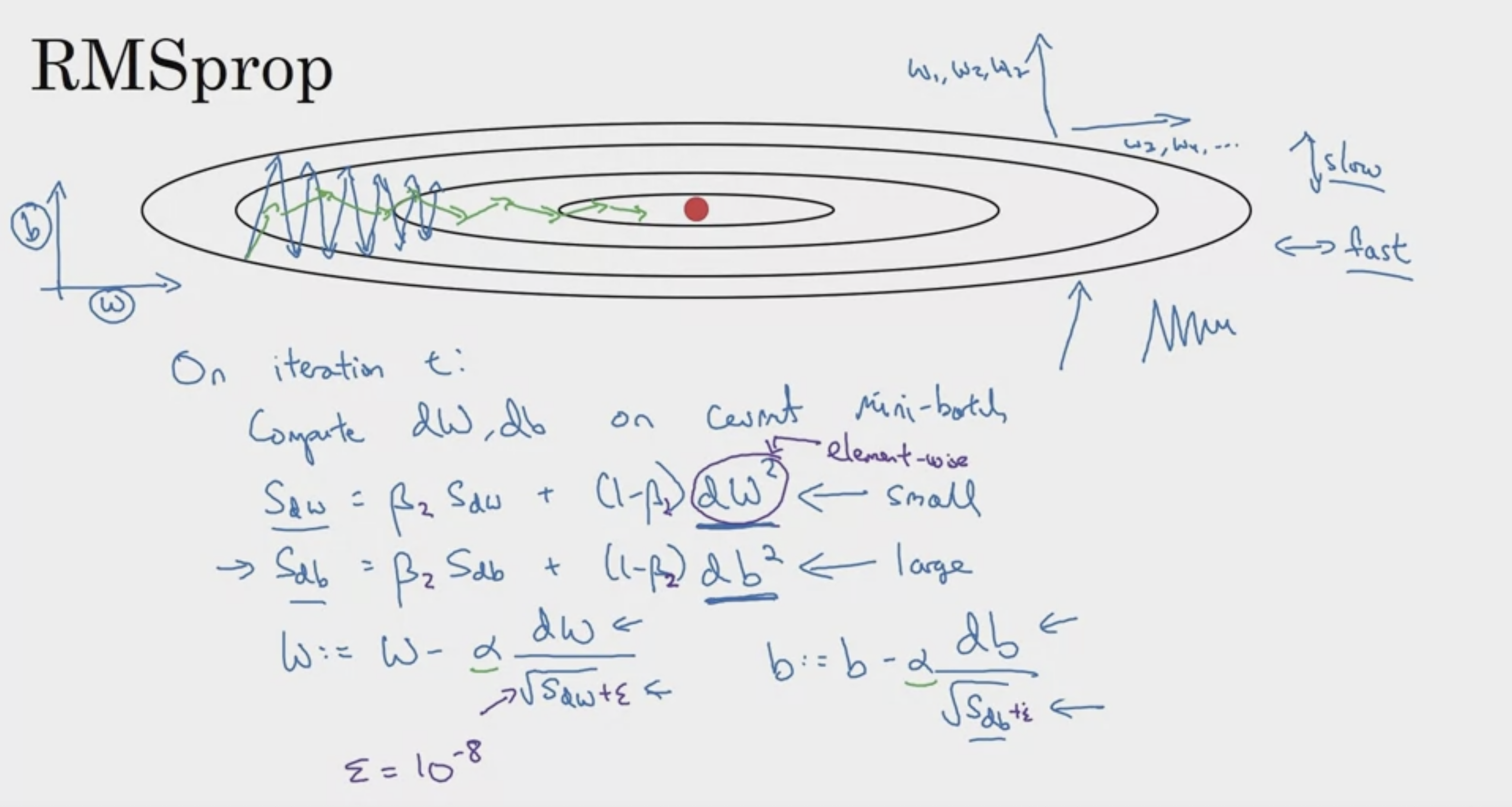
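Below is a minimal plain-Python sketch of gradient descent with momentum. It assumes the exponentially-weighted-average form v = βv + (1 − β)dW, W = W − αv.

The names `momentum_step` and `grad_elongated_bowl`, the test bowl f(x, y) = 0.5x² + 10y², the starting point, and α = 0.1 are all hypothetical. They were chosen to mimic the elongated contour plot, where plain gradient descent zig-zags vertically. β = 0.9 is a commonly used default.

```python
def momentum_step(w, v, grad, alpha=0.1, beta=0.9):
    """One gradient-descent-with-momentum update on a list of parameters.

    v is an exponentially weighted average of past gradients:
        v = beta * v + (1 - beta) * grad
        w = w - alpha * v
    """
    v = [beta * vi + (1 - beta) * gi for vi, gi in zip(v, grad)]
    w = [wi - alpha * vi for wi, vi in zip(w, v)]
    return w, v


def grad_elongated_bowl(w):
    """Gradient of the hypothetical f(x, y) = 0.5 * x**2 + 10 * y**2.

    The bowl is shallow along x (horizontal) and steep along y (vertical),
    the situation where plain gradient descent oscillates vertically.
    """
    x, y = w
    return [x, 20.0 * y]


w, v = [-5.0, 1.0], [0.0, 0.0]  # start far from the minimum horizontally
for _ in range(500):
    w, v = momentum_step(w, v, grad_elongated_bowl(w))
print(w)  # both coordinates end up very close to the minimum at (0, 0)
```

Averaging the gradients cancels out the alternating vertical components, while the consistent horizontal component accumulates.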

RMSprop

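How does this work?

We want small updates in the vertical direction

and large updates in the horizontal direction (we need to travel far that way)!

RMSprop divides each gradient by the square root of an exponentially weighted average of its squares. Gradients are large in the vertical direction, so those steps shrink. Gradients are small in the horizontal direction, so those steps become relatively larger.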

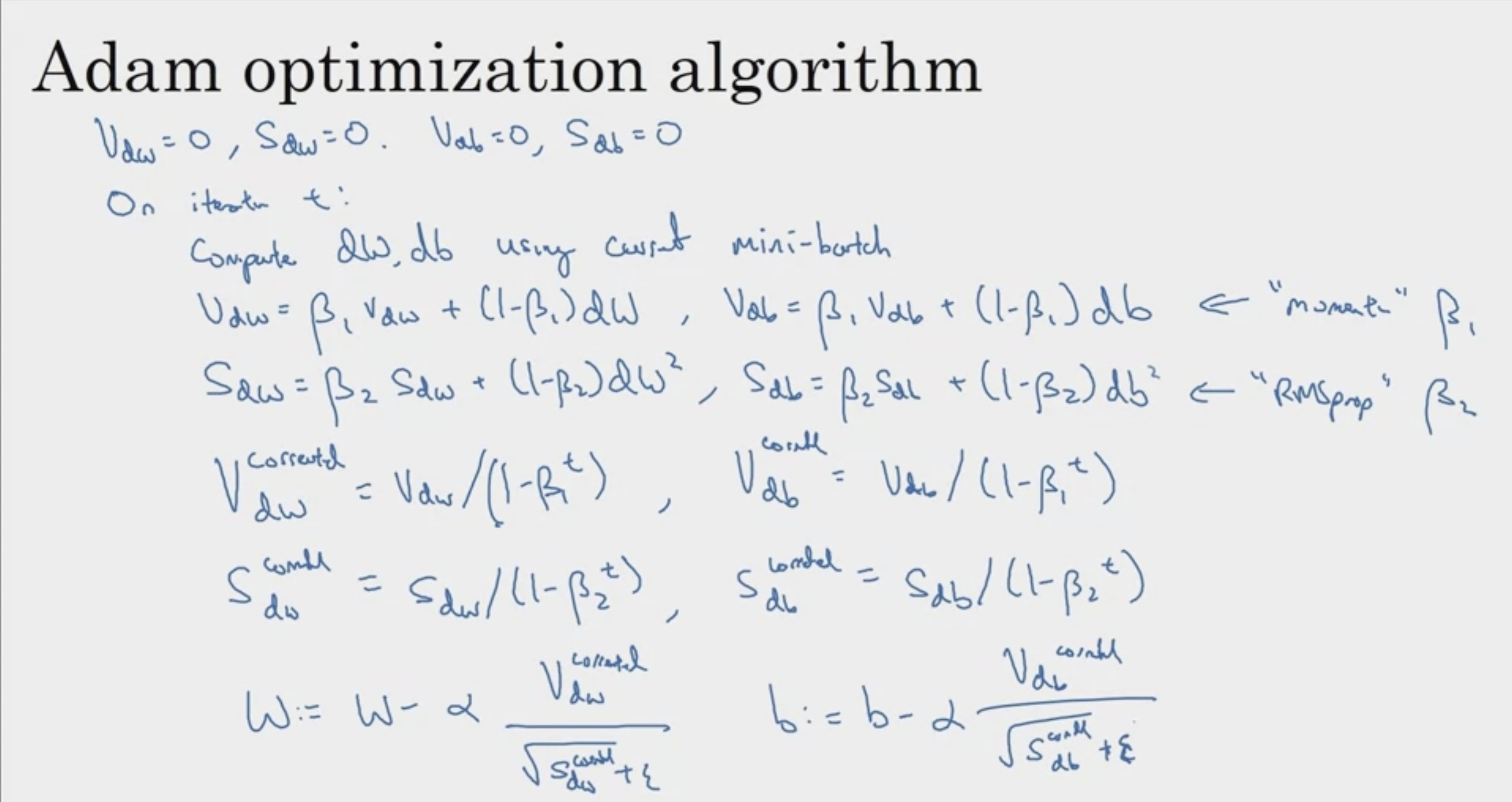

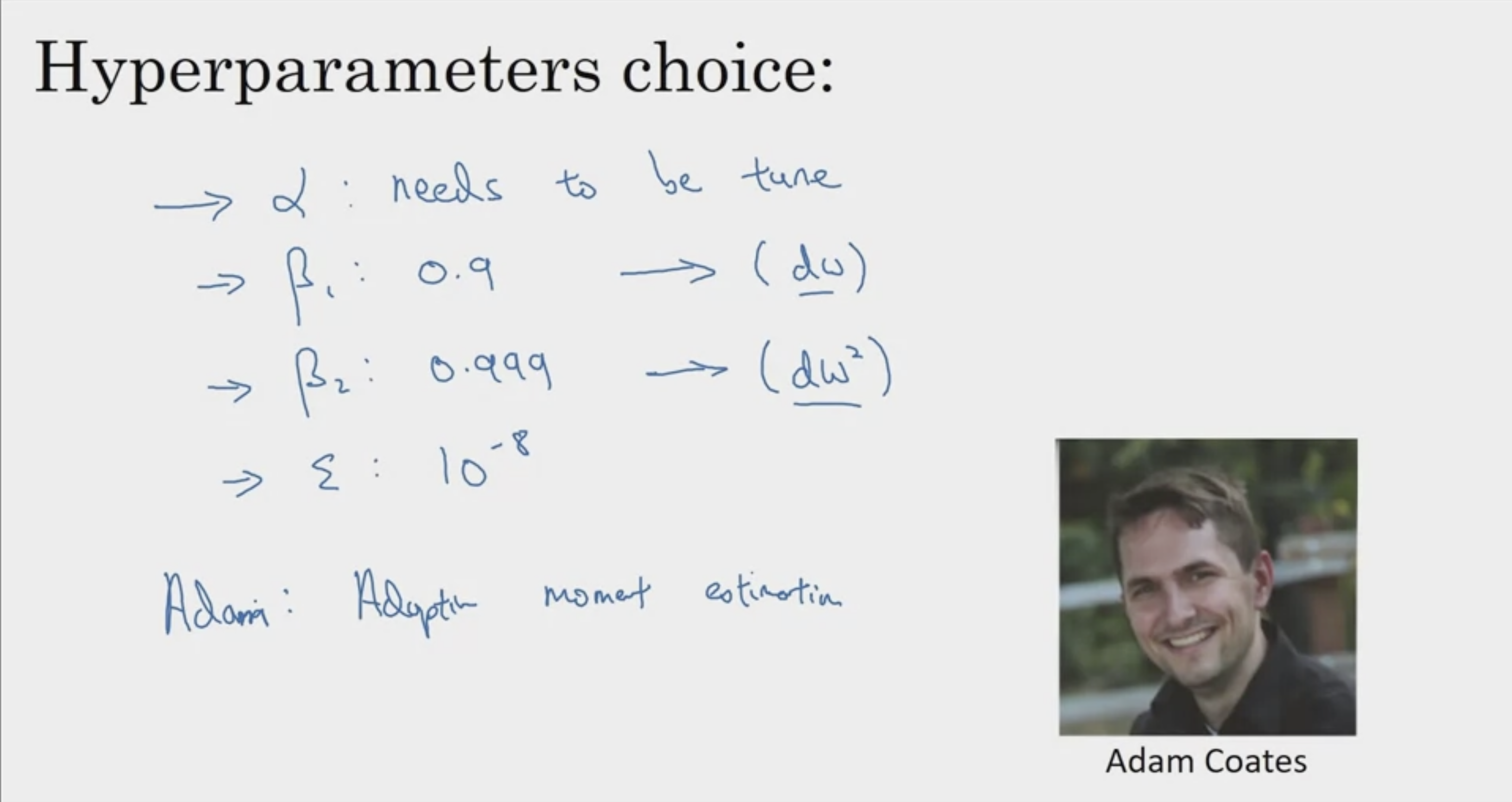
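The following is a small plain-Python sketch of one RMSprop update. It assumes the form S = βS + (1 − β)dW², W = W − α·dW / (√S + ε).

The names `rmsprop_step` and `grad_elongated_bowl`, the bowl f(x, y) = 0.5x² + 10y², and the values α = 0.01, β = 0.9, ε = 1e-8 are illustrative assumptions. The demo takes a single step from a point where the vertical gradient is 4× the horizontal one. It shows that dividing by √S makes the two step sizes essentially equal.

```python
import math


def rmsprop_step(w, s, grad, alpha=0.01, beta=0.9, eps=1e-8):
    """One RMSprop update on a list of parameters.

    s is an exponentially weighted average of *squared* gradients:
        s = beta * s + (1 - beta) * grad**2
        w = w - alpha * grad / (sqrt(s) + eps)
    eps only guards against division by zero.
    """
    s = [beta * si + (1 - beta) * gi * gi for si, gi in zip(s, grad)]
    w = [wi - alpha * gi / (math.sqrt(si) + eps)
         for wi, gi, si in zip(w, grad, s)]
    return w, s


def grad_elongated_bowl(w):
    # Hypothetical test function f(x, y) = 0.5 * x**2 + 10 * y**2
    x, y = w
    return [x, 20.0 * y]


w0 = [-5.0, 1.0]
g0 = grad_elongated_bowl(w0)  # [-5.0, 20.0]: vertical gradient is 4x larger
w1, s1 = rmsprop_step(w0, [0.0, 0.0], g0)
dx, dy = w1[0] - w0[0], w1[1] - w0[1]
print(dx, dy)  # (almost) equal magnitudes; plain GD would step 4x further vertically
```

Because S starts at 0, this first step is inflated by a factor of about 1/√(1 − β). Adam's bias correction addresses exactly this.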

Adam Optimization Algorithm

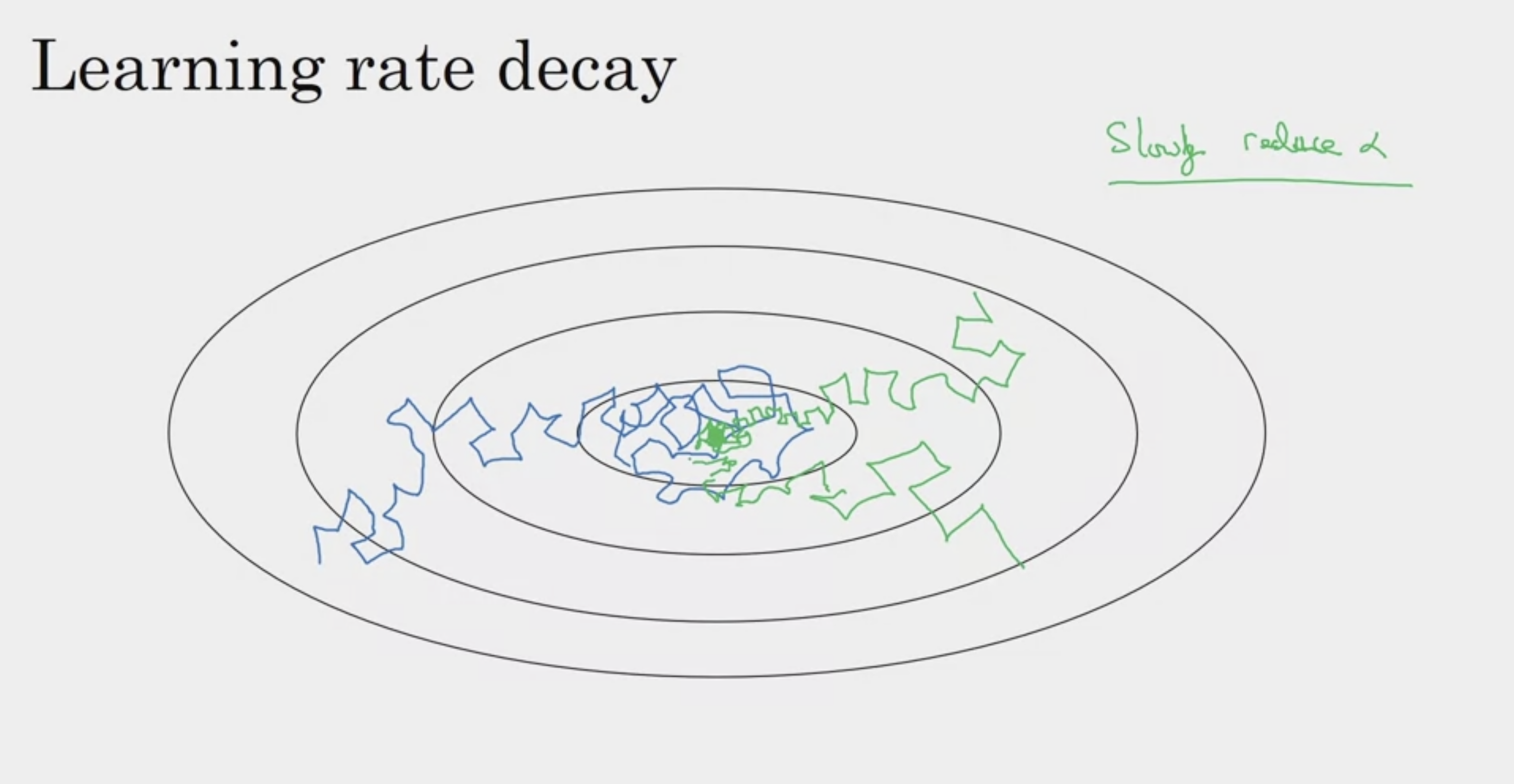

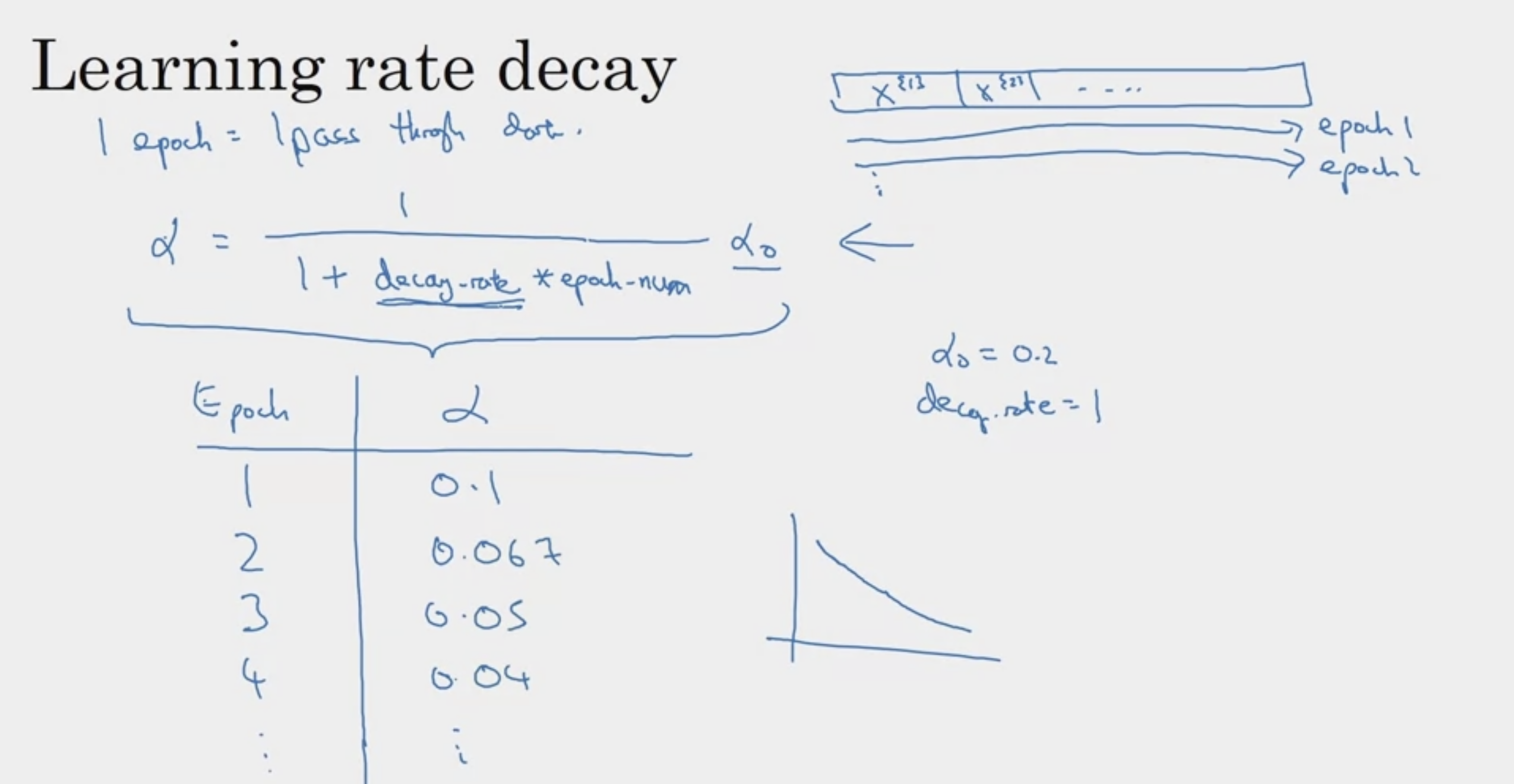

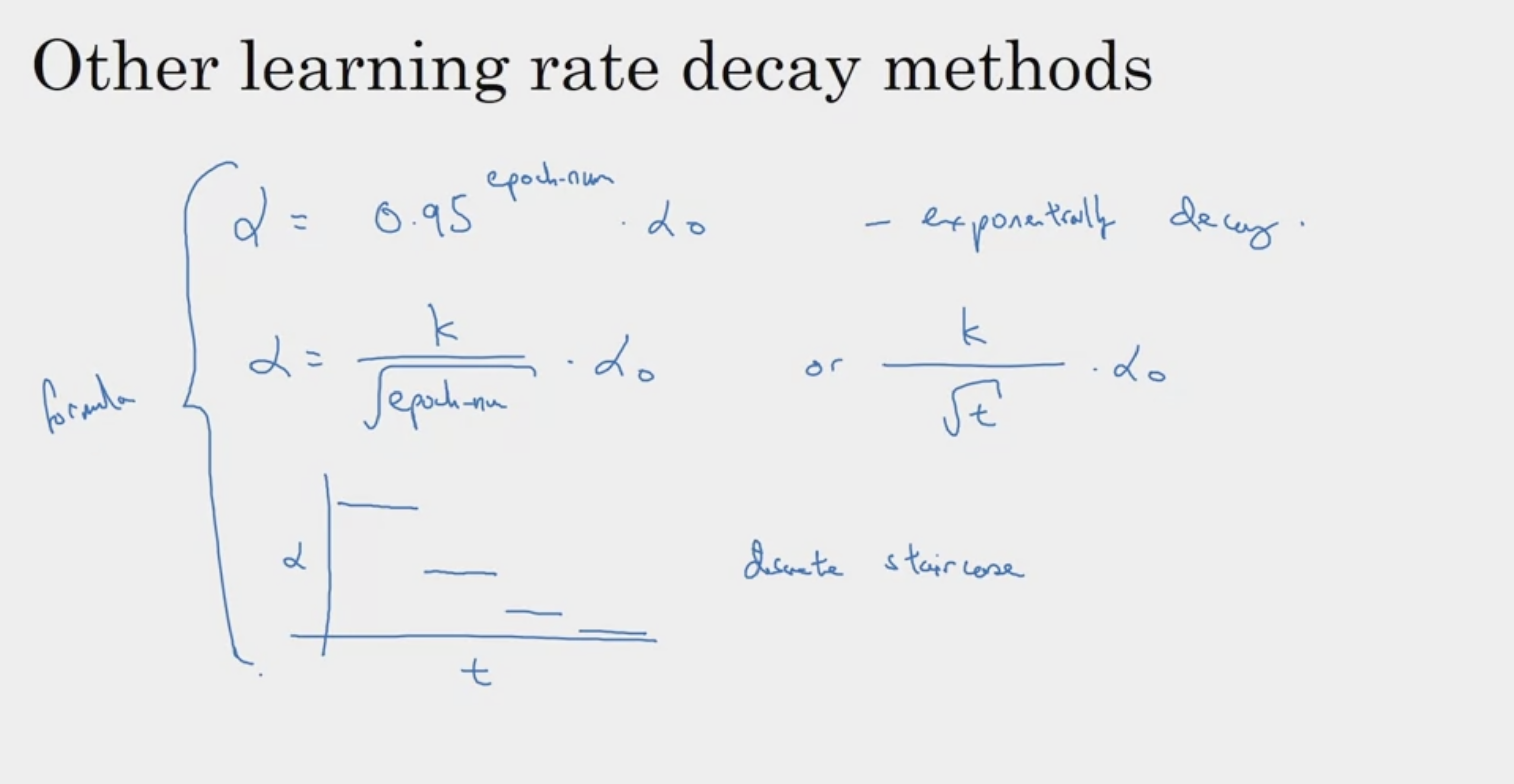
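Adam combines the two previous ideas. It keeps a momentum term (first moment m) and an RMSprop term (second moment v), and bias-corrects both because they start at zero.

Here is a minimal plain-Python sketch. β1 = 0.9, β2 = 0.999, ε = 1e-8 are the usual recommended defaults. The learning rate α = 0.1, the step count, and the names `adam` and `grad_elongated_bowl` are assumptions made for this toy problem, not tuned values.

```python
import math


def adam(grad_fn, w, alpha=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimise a function with Adam, given its gradient grad_fn(w) -> list."""
    m = [0.0] * len(w)  # first moment: momentum-style average of gradients
    v = [0.0] * len(w)  # second moment: RMSprop-style average of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]  # bias correction
        v_hat = [vi / (1 - beta2 ** t) for vi in v]  # bias correction
        w = [wi - alpha * mh / (math.sqrt(vh) + eps)
             for wi, mh, vh in zip(w, m_hat, v_hat)]
    return w


def grad_elongated_bowl(w):
    # Hypothetical test function f(x, y) = 0.5 * x**2 + 10 * y**2
    x, y = w
    return [x, 20.0 * y]


w = adam(grad_elongated_bowl, [-5.0, 1.0])
print(w)  # ends up near the minimum at (0, 0)
```

In practice β1, β2 and ε are rarely tuned; α is the hyperparameter that usually needs searching.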

Learning Rate Decay

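Early in training, take big steps.

Later on, reduce the learning rate, so the parameters settle into a tighter region around the minimum instead of wandering around it.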

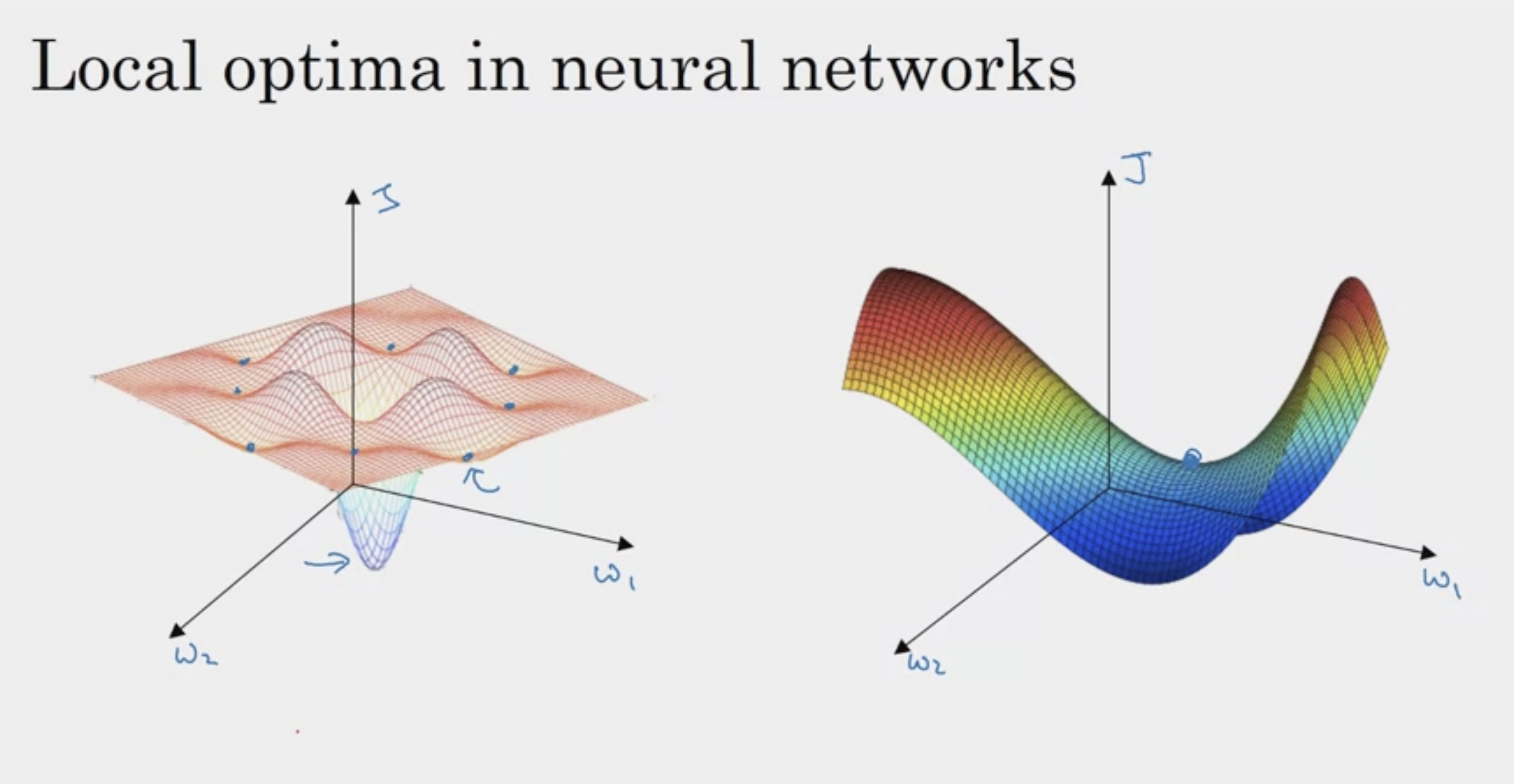

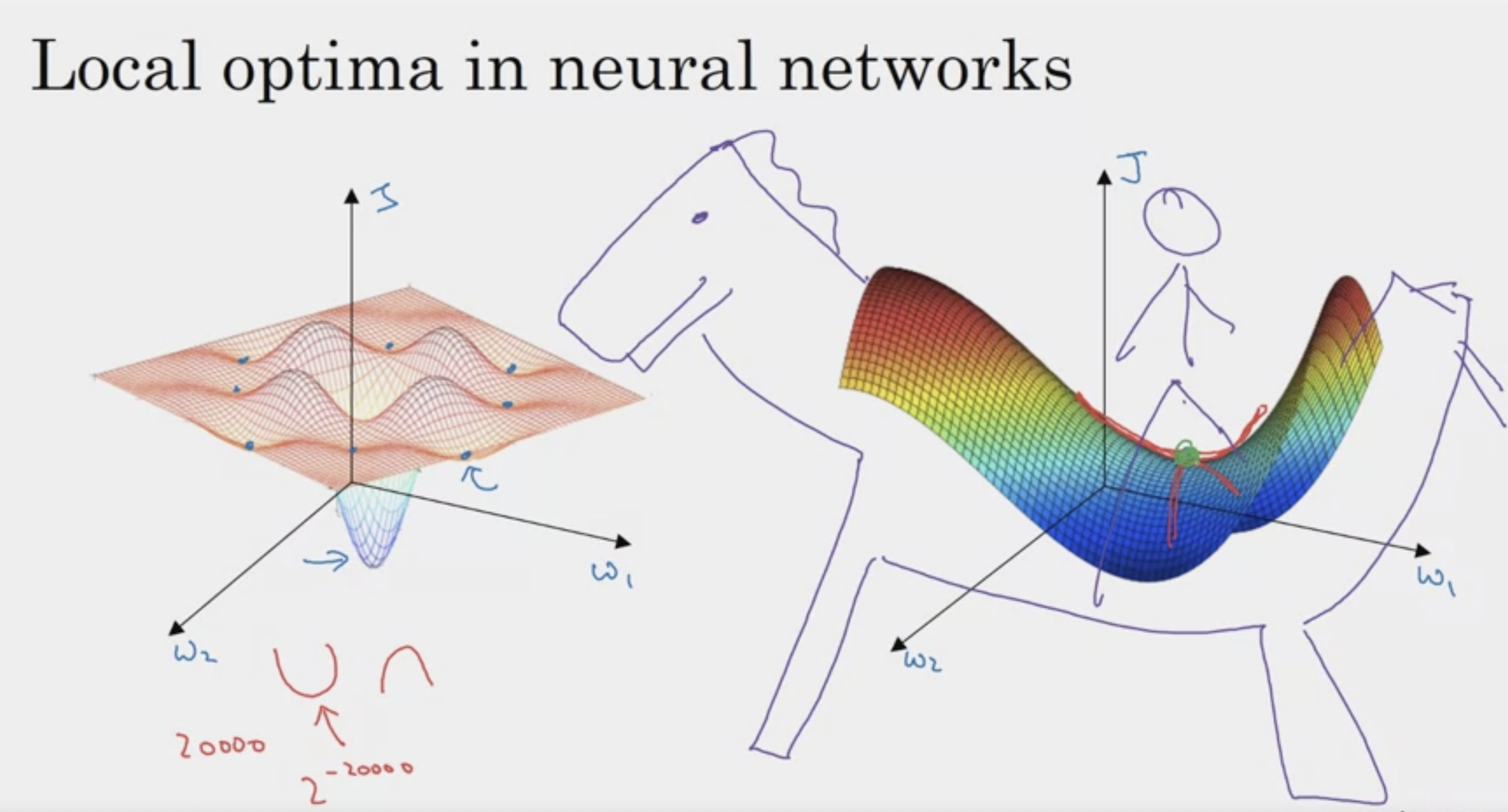

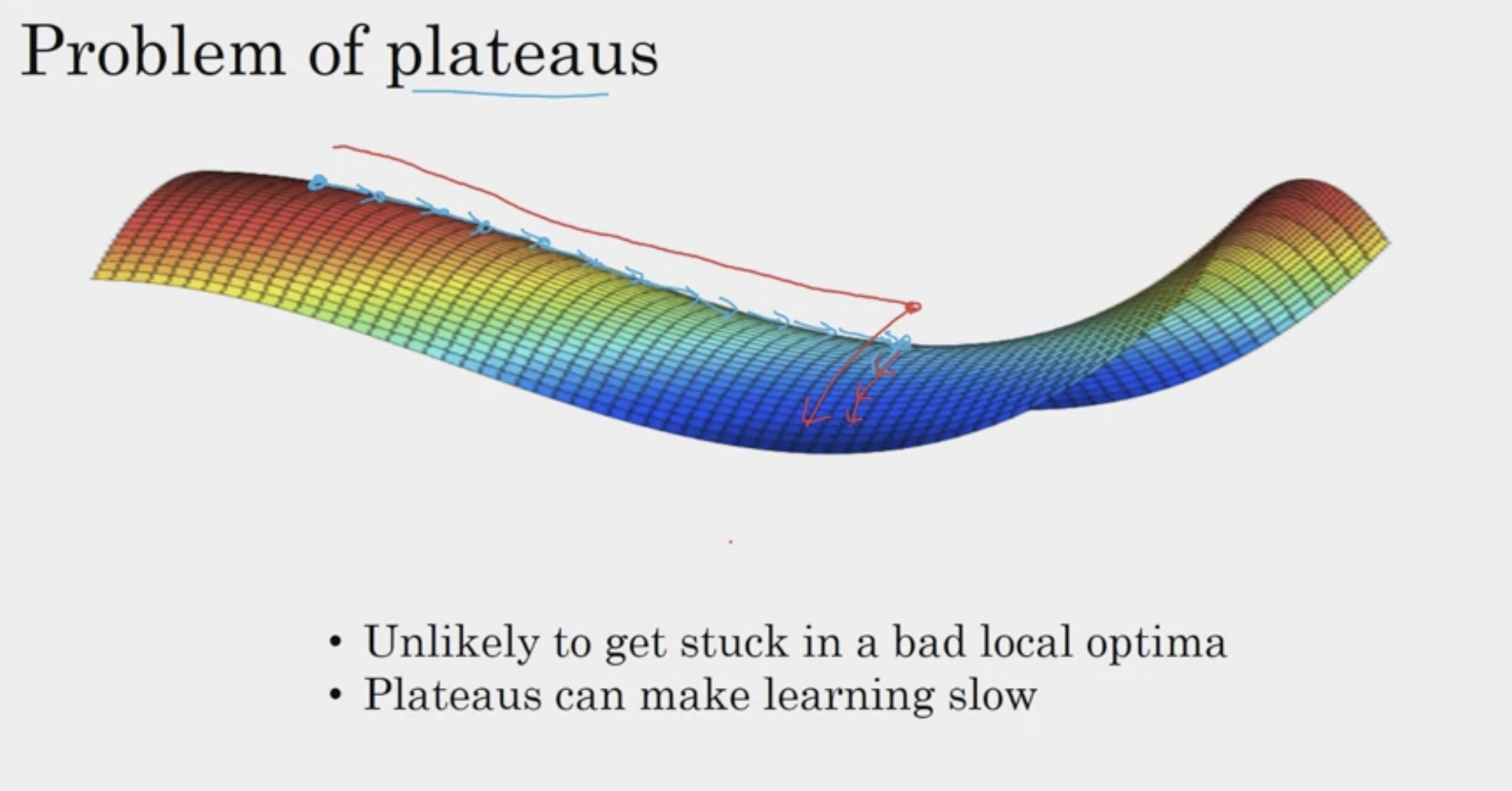
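The schedules below are common ways to shrink α per epoch, sketched in plain Python. The function names and the example values α0 = 0.2 and decay_rate = 1 are illustrative assumptions. The formulas themselves are standard:

- α0 / (1 + decay_rate · epoch)
- base^epoch · α0
- k / √epoch · α0

```python
import math


def inverse_time_decay(alpha0, decay_rate, epoch_num):
    # alpha = alpha0 / (1 + decay_rate * epoch_num)
    return alpha0 / (1 + decay_rate * epoch_num)


def exponential_decay(alpha0, epoch_num, base=0.95):
    # alpha = base**epoch_num * alpha0, with base < 1
    return base ** epoch_num * alpha0


def inverse_sqrt_decay(alpha0, epoch_num, k=1.0):
    # alpha = k / sqrt(epoch_num) * alpha0, defined for epoch_num >= 1
    return k / math.sqrt(epoch_num) * alpha0


for epoch in range(1, 5):
    print(epoch, round(inverse_time_decay(0.2, 1.0, epoch), 3))
```

With α0 = 0.2 and decay_rate = 1, the first schedule gives 0.1, 0.067, 0.05 and 0.04 for epochs 1 to 4. The decay rate thus becomes one more hyperparameter to tune.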

The Problem of Local Optima

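Plateaus (regions where the gradient stays close to zero for a long time) also hinder learning, because gradient descent moves across them very slowly.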
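The effect can be illustrated with a deliberately simplified model. Treat a plateau as a stretch where f has a tiny constant slope, here a hypothetical f(x) = −1e-4·x. The names `SLOPE`, `grad`, `steps_gd` and `steps_adam`, and the values α = 0.01 and distance = 0.1, are illustrative assumptions.

Plain gradient descent moves α·|gradient| per step, so it crawls. Adam divides by the root of the squared-gradient average, so its step is about α regardless of how small the gradient is.

```python
import math

SLOPE = -1e-4  # hypothetical plateau: f(x) = SLOPE * x, a tiny constant gradient


def grad(x):
    return SLOPE


def steps_gd(alpha=0.01, distance=0.1):
    """Count plain gradient-descent steps needed to move `distance` along x."""
    x, steps = 0.0, 0
    while x < distance:
        x -= alpha * grad(x)  # each step moves only alpha * |gradient| = 1e-6
        steps += 1
    return steps


def steps_adam(alpha=0.01, distance=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Count Adam steps needed to move the same distance."""
    x, m, v, t = 0.0, 0.0, 0.0, 0
    while x < distance:
        t += 1
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat, v_hat = m / (1 - beta1 ** t), v / (1 - beta2 ** t)
        x -= alpha * m_hat / (math.sqrt(v_hat) + eps)  # step is roughly alpha
    return t


gd, ad = steps_gd(), steps_adam()
print(gd, ad)
```

Real plateaus are not linear, so this only shows the scaling argument: gradient descent needs on the order of 10⁵ steps here, while Adam needs about a dozen.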
