2023. 9. 29. 21:57ㆍArtificialIntelligence/2023GoogleMLBootcamp

Orthogonalization

이게 어떻게 ML과 연관이 있는가?

Single Number Evaluation Metric

precision (정밀도)

: cat으로 판별된 것 중 실제 cat의 비율

recall (재현율)

: 실제 cat 중 제대로 분류된 것의 비율

A와 B중 어떤 분류기가 더 좋은가?

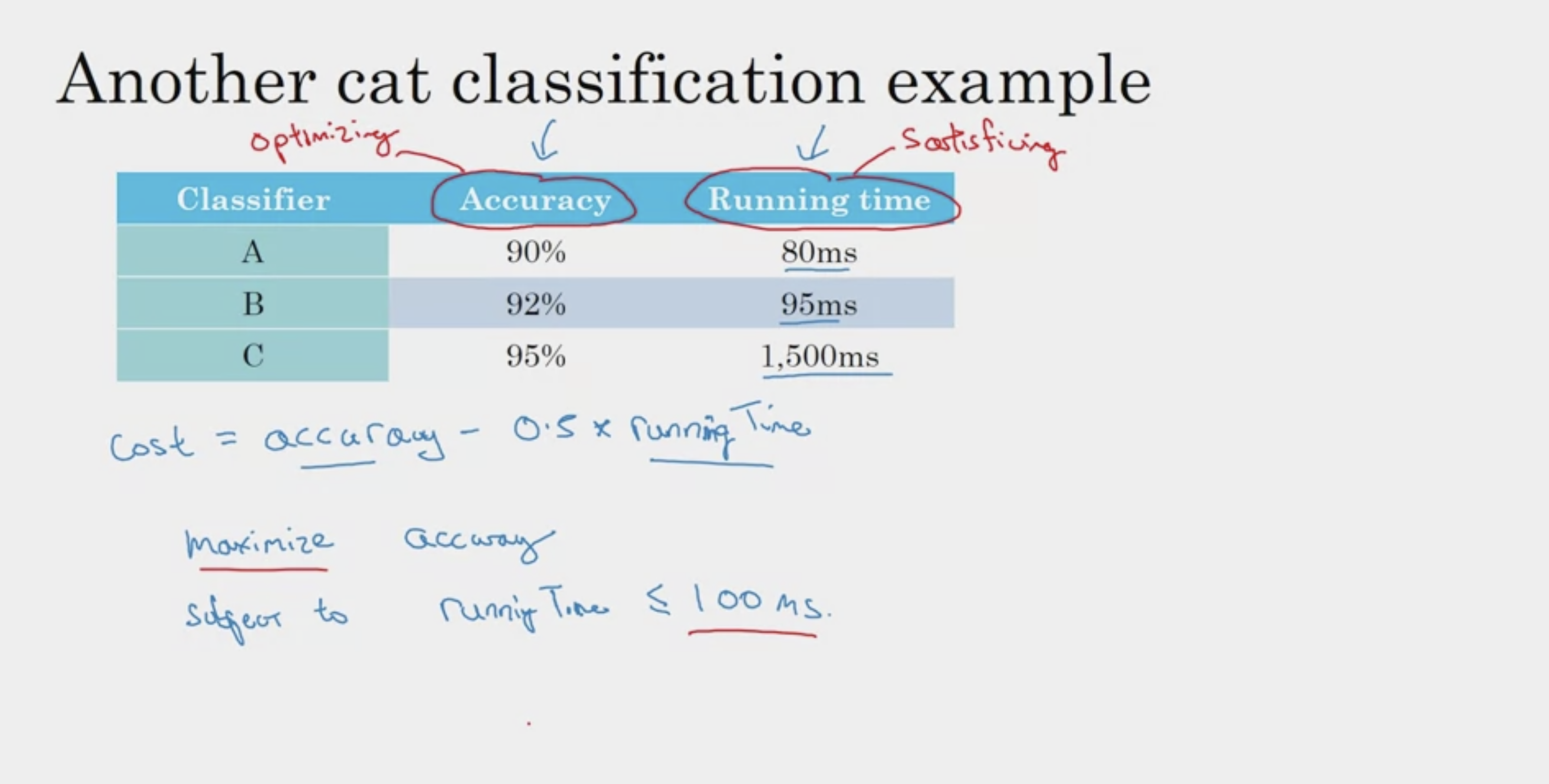

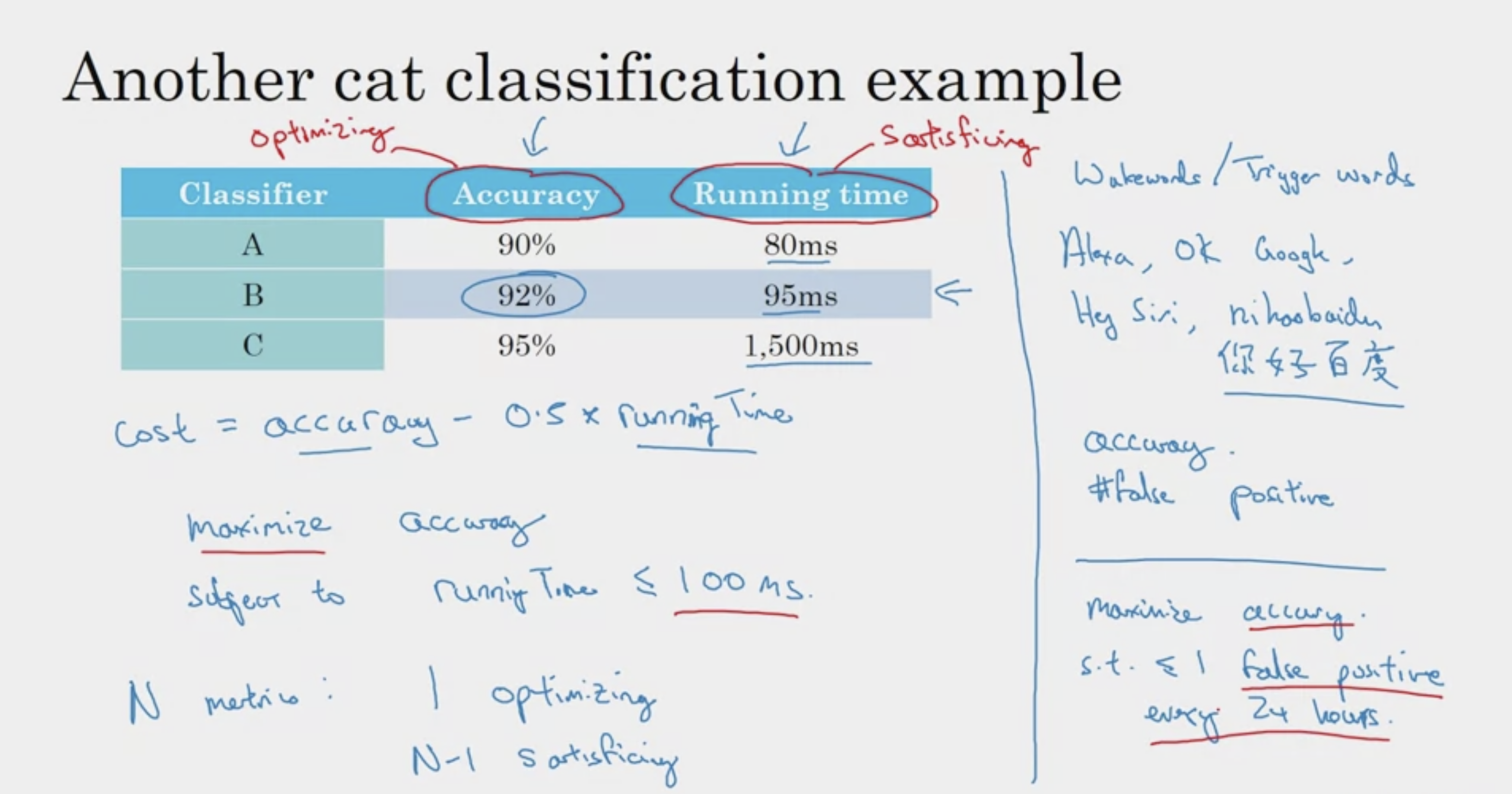

Satisficing and Optimizing Metric

cost func에 다양한 의도를 담을 수 있다

accuracy - 높을수록 좋음 (optimize)

running time - 100ms 이하이기만 하면 됨 (satisfied)

+ 음성 신호의 100ms를 의미!

Train/Dev/Test Distributions

두 set이 같은 분포를 가질 수 있도록

randomly sampling

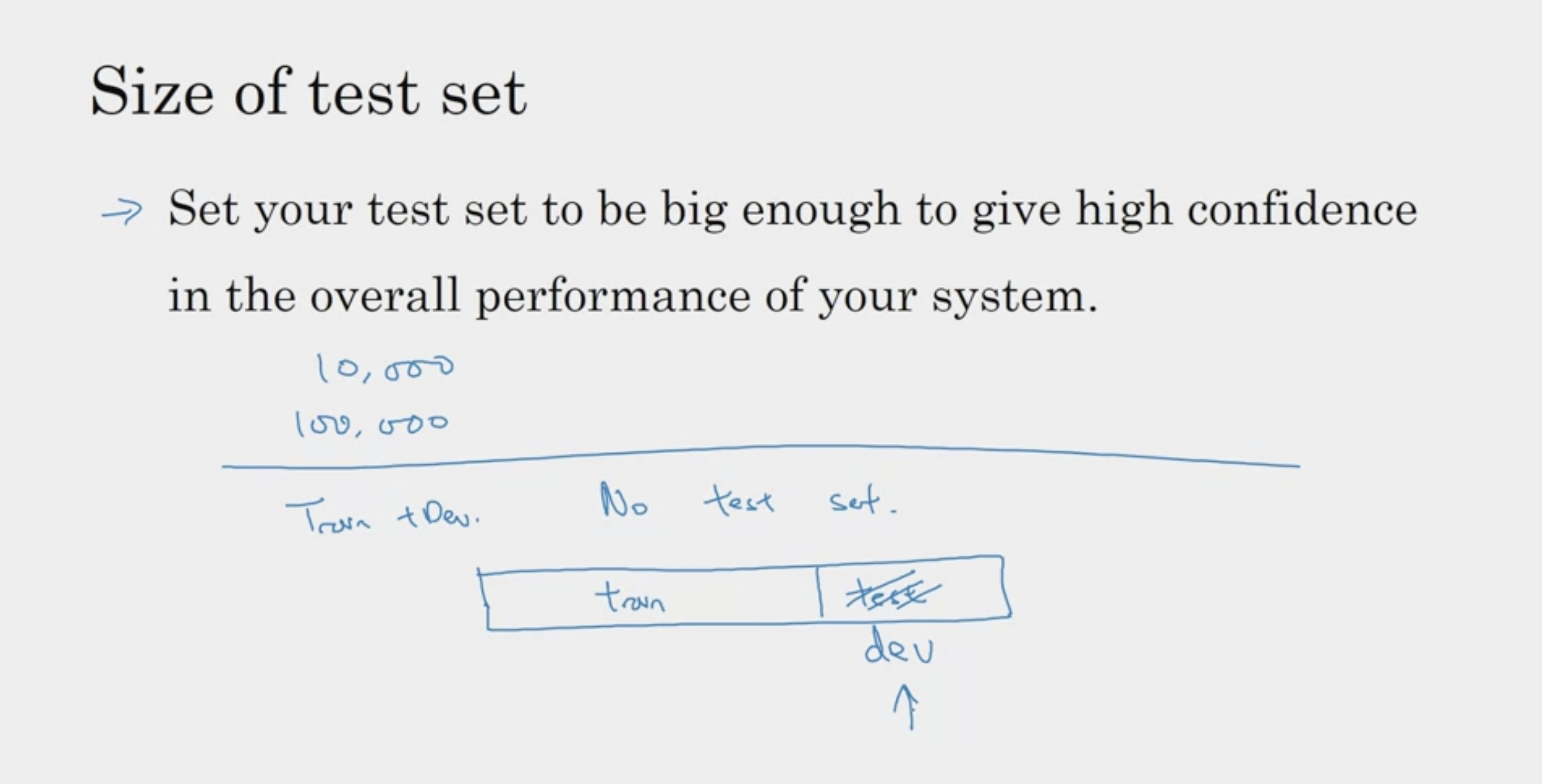

Size of the Dev and Test Sets

하지만 현대는 X

When to Change Dev/Test Sets and Metrics?

새로운 평가 지표를 찾아보아야 한다. evaluation metric

원래 error metric에 만족하지 못한다면, 다른 메트릭을 생각해보아라

1. 타겟을 설정하고 (목표를 정하고)

2. 그 타겟을 잘 맞추도록, 메트릭에 맞춘다

-> error metric은 상황에 따라 바뀔 수도 있는 것!

고화질의 사진으로 학습시켰을 때, A가 더 좋은 알고리즘

그러나 유저의 사진은 흐린 화질 -> B가 더 좋은 알고리즘이 될 수 있다

이런 상황에서는 metric을 바꾸자

Why Human-level Performance?

베이즈 에러

x -> y mapping에서 최적의 에러

(더 이상 좋은 값은 X)

Avoidable Bias

- 사람과 차이가 많이 날 때 -> 모델 학습 성능을 더 올리기 위해 노력 (bias 줄이기)

- 사람과 차이가 거의 없을 때 -> 모델 학습 성능을 검증 데이터 (valid, dev set)과 맞추기 위해 노력 (오버피팅 관점, variance 줄이기)

Understanding Human-level Performance

베이즈 에러를 생각해보면 답이 나온다

bayes error == human level error (0.5%)

이전까지 0과 비교하는 bias 대신

bayes error와 비교하는 avoidable bias (보다 현실적인 비교)

이것이 human level error의 의의 (bayes error의 approximation)

Surpassing Human-level Performance

여기서 avoidable bias는? (베이즈를 정의하라)

머신러닝 알고리즘이 human을 넘어선 경우 -> avoidable bias를 정의하기 어렵다

사람보다 ML 성능이 높은 경우

- structural data

- not natural perception (사람에게 용이한 자연스러운 인지가 X)

- lots of data (사람이 한번에 파악하기 힘든 많은 데이터)

Improving your Model Performance

이전과 유사하긴 하다

- model 성능을 더 끌어올린다

vs 오버피팅 방지

'ArtificialIntelligence > 2023GoogleMLBootcamp' 카테고리의 다른 글

| [GoogleML] Transfer Learning & End-to-end Deep Learning (0) | 2023.09.30 |

|---|---|

| [GoogleML] Error Analysis & Mismatched Train and Test Set (0) | 2023.09.30 |

| [GoogleML] Multi-class Classification (0) | 2023.09.23 |

| [GoogleML] Hyperparameter Tuning, Regularization and Optimization 수료 (0) | 2023.09.22 |

| [GoogleML] Batch Normalization (0) | 2023.09.21 |