2024. 05. 17. Friday

Benchmarking a New Paradigm: An Experimental Analysis of a Real Processing-in-Memory Architecture

Benchmarking a New Paradigm: Experimental Analysis and Characterization of a Real Processing-in-Memory System

ieeexplore.ieee.org

Abstract

- Many modern workloads, such as neural networks, databases, and graph processing, are fundamentally memory-bound. For such workloads, the data movement between main memory and CPU cores imposes a significant overhead in terms of both latency and energy. A major reason is that this communication happens through a narrow bus with high latency and limited bandwidth, and the low data reuse in memory-bound workloads is insufficient to amortize the cost of main memory access. Fundamentally addressing this data movement bottleneck requires a paradigm where the memory system assumes an active role in computing by integrating processing capabilities. This paradigm is known as processing-in-memory (PIM).

- Recent research explores different forms of PIM architectures, motivated by the emergence of new 3D-stacked memory technologies that integrate memory with a logic layer where processing elements can be easily placed. Past works evaluate these architectures in simulation or, at best, with simplified hardware prototypes.

- In contrast, the UPMEM company has designed and manufactured the first publicly-available real-world PIM architecture. The UPMEM PIM architecture combines traditional DRAM memory arrays with general-purpose in-order cores, called DRAM Processing Units (DPUs), integrated in the same chip.

- This paper provides the first comprehensive analysis of the first publicly-available real-world PIM architecture. We make two key contributions.

- First, we conduct an experimental characterization of the UPMEM-based PIM system using microbenchmarks to assess various architecture limits such as compute throughput and memory bandwidth, yielding new insights.

- Second, we present PrIM (Processing-In-Memory benchmarks), a benchmark suite of 16 workloads from different application domains (e.g., dense/sparse linear algebra, databases, data analytics, graph processing, neural networks, bioinformatics, image processing), which we identify as memory-bound. We evaluate the performance and scaling characteristics of PrIM benchmarks on the UPMEM PIM architecture, and compare their performance and energy consumption to their state-of-the-art CPU and GPU counterparts.

- Our extensive evaluation conducted on two real UPMEM-based PIM systems with 640 and 2,556 DPUs provides new insights about suitability of different workloads to the PIM system, programming recommendations for software designers, and suggestions and hints for hardware and architecture designers of future PIM systems.

Introduction

- In modern computing systems, a large fraction of the execution time and energy consumption of modern data-intensive workloads is spent moving data between memory and processor cores. This data movement bottleneck stems from the fact that, for decades, the performance of processor cores has been increasing at a faster rate than the memory performance. The gap between an arithmetic operation and a memory access in terms of latency and energy keeps widening and the memory access is becoming increasingly more expensive.

- As a result, recent experimental studies report that data movement accounts for 62%, 40%, and 35% of the total system energy in various consumer, scientific, and mobile applications, respectively.

- One promising way to alleviate the data movement bottleneck is processing-in-memory (PIM), which equips memory chips with processing capabilities. This paradigm has been explored for more than 50 years, but limitations in memory technology prevented commercial hardware from successfully materializing. More recently, difficulties in DRAM scaling (i.e., challenges in increasing density and performance while maintaining reliability, latency and energy consumption) have motivated innovations such as 3D-stacked memory and nonvolatile memory which present new opportunities to redesign the memory subsystem while integrating processing capabilities.

- 3D-stacked memory integrates DRAM layers with a logic layer, which can embed processing elements. Several works explore this approach, called processing-near-memory (PNM), to implement different types of processing components in the logic layer, such as general-purpose cores, application-specific accelerators, simple functional units, GPU cores, or reconfigurable logic.

- However, 3D-stacked memory suffers from high cost and limited capacity, and the logic layer has hardware area and thermal dissipation constraints, which limit the capabilities of the embedded processing components.

- On the other hand, processing-using-memory (PUM) takes advantage of the analog operational properties of memory cells in SRAM, DRAM, or nonvolatile memory to perform specific types of operations efficiently.

- However, processing-using-memory is either limited to simple bitwise operations (e.g., majority, AND, OR) [21, 25, 26, 188, 191], requires high area overheads to perform more complex operations [35, 36, 99], or requires significant changes to data organization, manipulation, and handling mechanisms to enable bit-serial computation, while still having limitations on certain operations.

- Moreover, processing-using-memory approaches are usually efficient mainly for regular computations, since they naturally operate on a large number of memory cells (e.g., entire rows across many subarrays) simultaneously.

- For these reasons, complete PIM systems based on 3D-stacked memory or processing-using-memory have not yet been commercialized in real hardware.

- The UPMEM PIM architecture is the first PIM system to be commercialized in real hardware. To avoid the aforementioned limitations, it uses conventional 2D DRAM arrays and combines them with general-purpose processing cores, called DRAM Processing Units (DPUs), on the same chip. Combining memory and processing components on the same chip imposes serious design challenges.

- For example, DRAM designs use only three metal layers, while conventional processor designs typically use more than ten. While these challenges prevent the fabrication of fast logic transistors, UPMEM overcomes them via DPU cores that are relatively deeply pipelined and fine-grained multithreaded, running at several hundred megahertz.

- The UPMEM PIM architecture provides several key advantages with respect to other PIM proposals.

- First, it relies on mature 2D DRAM design and fabrication process, avoiding the drawbacks of emerging 3D-stacked memory technology.

- Second, the general-purpose DPUs support a wide variety of computations and data types, similar to simple modern general-purpose processors.

- Third, the architecture is suitable for irregular computations because the threads in a DPU can execute independently of each other (i.e., they are not bound by lockstep execution as in SIMD).

- Fourth, UPMEM provides a complete software stack that enables DPU programs to be written in the commonly-used C language.

- Rigorously understanding the UPMEM PIM architecture, the first publicly-available PIM architecture, and its suitability to various workloads can provide valuable insights to programmers, users and architects of this architecture as well as of future PIM systems. To this end, our work provides the first comprehensive experimental characterization and analysis of the first publicly-available real-world PIM architecture. To enable our experimental studies and analyses, we develop new microbenchmarks and a new benchmark suite, which we openly and freely make available.

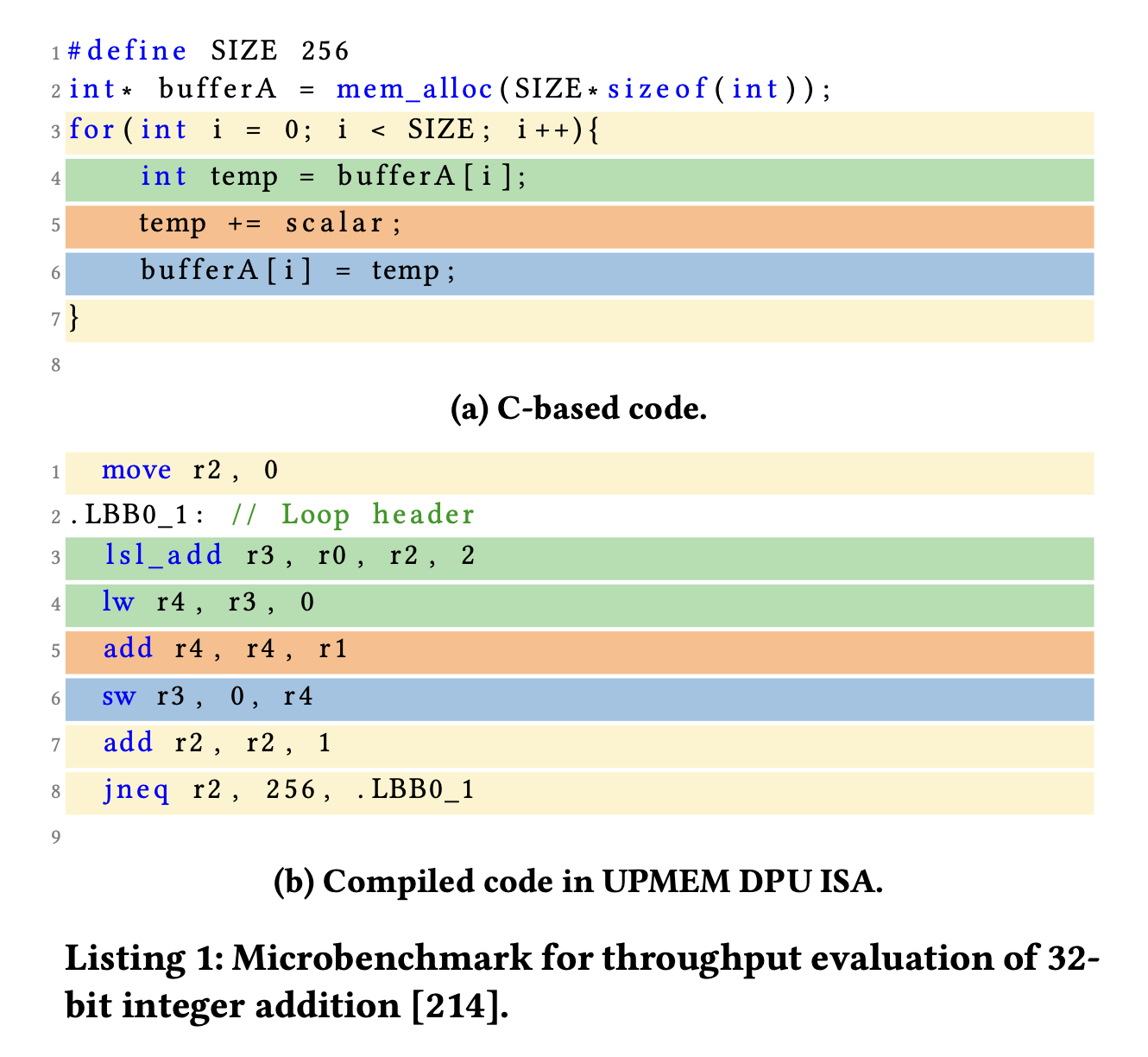

- We develop a set of microbenchmarks to evaluate, characterize, and understand the limits of the UPMEM-based PIM system, yielding new insights.

- First, we obtain the compute throughput of a DPU for different arithmetic operations and data types.

- Second, we measure the bandwidth of two different memory spaces that a DPU can directly access using load/store instructions:

- (1) a DRAM bank called Main RAM (MRAM)

- (2) an SRAM-based scratch-pad memory called Working RAM (WRAM).

- We employ streaming (i.e., unit-stride), strided, and random memory access patterns to measure the sustained bandwidth of both types of memories.

- Third, we measure the sustained bandwidth between the standard main memory and the MRAM banks for different types and sizes of transfers, which is important for the communication of the DPU with the host CPU and other DPUs.

- We present PrIM (Processing-In-Memory benchmarks), the first benchmark suite for a real PIM architecture. PrIM includes 16 workloads from different application domains (e.g., dense/sparse linear algebra, databases, data analytics, graph processing, neural networks, bioinformatics, image processing), which we identify as memory-bound using the roofline model for a conventional CPU.

- We perform strong scaling and weak scaling experiments with the 16 benchmarks on a system with 2,556 DPUs, and compare their performance and energy consumption to their modern CPU and GPU counterparts. Our extensive evaluation provides new insights about suitability of different workloads to the PIM system, programming recommendations for software designers, and suggestions and hints for hardware and architecture designers of future PIM systems. All our microbenchmarks and PrIM benchmarks are publicly and freely available to serve as programming samples for real PIM architectures, evaluate and compare current and future PIM systems, and help further advance PIM architecture, programming, and software research.

- The main contributions of this work are as follows:

- We perform the first comprehensive characterization and analysis of the first publicly-available real-world PIM architecture. We analyze the new architecture’s potential, limitations and bottlenecks. We analyze (1) memory bandwidth at different levels of the DPU memory hierarchy for different memory access patterns, (2) DPU compute throughput of different arithmetic operations for different data types, and (3) strong and weak scaling characteristics for different computation patterns.

- We find that (1) the UPMEM PIM architecture is fundamentally compute bound, since workloads with more complex operations than integer addition fully utilize the instruction pipeline before they can potentially saturate the memory bandwidth, and (2) workloads that require inter-DPU communication do not scale well, since there is no direct communication channel among DPUs, and therefore, all inter-DPU communication takes place via the host CPU, i.e., through the narrow memory bus.

- We present and open-source PrIM, the first benchmark suite for a real PIM architecture, composed of 16 real-world workloads that are memory-bound on conventional processor-centric systems. The workloads have different characteristics, exhibiting heterogeneity in their memory access patterns, operations and data types, and communication patterns. The PrIM benchmark suite provides a common set of workloads for evaluating the UPMEM PIM architecture and can be useful for programming, architecture, and systems researchers alike to improve multiple aspects of future PIM hardware and software.

- We compare the performance and energy consumption of PrIM benchmarks on two UPMEM-based PIM systems with 2,556 DPUs and 640 DPUs to modern conventional processor-centric systems, i.e., CPUs and GPUs. Our analysis reveals several new and interesting findings. We highlight three major findings.

- First, both UPMEM-based PIM systems outperform a modern CPU (by 93.0× and 27.9×, on average, respectively) for 13 of the PrIM benchmarks, which do not require intensive inter-DPU synchronization or floating point operations. Section 5.2 provides a detailed analysis of our comparison of PIM systems to modern CPU and GPU.

- Second, the 2,556-DPU PIM system is faster than a modern GPU (by 2.54×, on average) for 10 PrIM benchmarks with (1) streaming memory accesses, (2) little or no inter-DPU synchronization, and (3) little or no use of complex arithmetic operations (i.e., integer multiplication/division, floating point operations).

- Third, the energy consumption comparison of the PIM, CPU, and GPU systems follows the same trends as the performance comparison: the PIM system yields large energy savings over the CPU and the GPU for workloads where it largely outperforms them.

- We are comparing the first ever commercial PIM system to CPU and GPU systems that have been heavily optimized for decades in terms of architecture, software, and manufacturing. Even then, we see significant advantages of PIM over CPU and GPU in most PrIM benchmarks (Section 5.2).

- We believe the architecture, software, and manufacturing of PIM systems will continue to improve (e.g., we suggest optimizations and areas for future improvement in Section 6). As such, fairer comparisons to CPU and GPU systems would be possible and can reveal higher benefits for PIM systems in the future.

GENERAL PROGRAMMING RECOMMENDATIONS

1. Execute on the DRAM Processing Units (DPUs) portions of parallel code that are as long as possible.

2. Split the workload into independent data blocks, which the DPUs operate on independently.

3. Use as many working DPUs in the system as possible.

4. Launch at least 11 tasklets (i.e., software threads) per DPU.
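Recommendations 2 to 4 can be pictured as a two-level partition of the input: one independent block per DPU, then one chunk per tasklet inside each block. The following is only a host-side planning sketch in Python under that assumption. The helper names `split_evenly` and `plan_work` are hypothetical, and the sketch does not use the UPMEM SDK or perform any transfers.

```python
def split_evenly(n_items, n_parts):
    """Return half-open (start, end) ranges that cover n_items in n_parts pieces."""
    base, extra = divmod(n_items, n_parts)
    ranges = []
    start = 0
    for part in range(n_parts):
        # The first `extra` pieces take one additional item each.
        size = base + (1 if part < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def plan_work(n_items, n_dpus, n_tasklets=11):
    """Assign one independent block per DPU, then one chunk per tasklet."""
    plan = []
    for dpu_start, dpu_end in split_evenly(n_items, n_dpus):
        chunks = [
            (dpu_start + s, dpu_start + e)
            for s, e in split_evenly(dpu_end - dpu_start, n_tasklets)
        ]
        plan.append(chunks)
    return plan


# Hypothetical sizes chosen only for illustration.
plan = plan_work(n_items=1000, n_dpus=4)
for dpu_id, chunks in enumerate(plan):
    print(dpu_id, chunks[0], chunks[-1])
```

Because the ranges never overlap, no DPU needs data owned by another DPU, which is the property recommendation 2 asks for.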

Key Observations 1

The arithmetic throughput of a DRAM Processing Unit saturates at 11 or more tasklets.

This observation is consistent for different data types (INT32, INT64, UINT32, UINT64, FLOAT, DOUBLE) and operations (ADD, SUB, MUL, DIV).
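A simple way to reason about this saturation is a back-of-the-envelope model. It assumes that a single tasklet can issue one instruction every 11 cycles, while the pipeline as a whole can complete one instruction per cycle. That dispatch interval, the clock frequency, and the instruction counts below are assumptions of this sketch rather than values stated in these notes.

```python
def dpu_throughput_mops(tasklets, freq_mhz, instr_per_op, dispatch_interval=11):
    """Estimate arithmetic throughput in millions of operations per second.

    Assumed model: each tasklet issues one instruction every
    `dispatch_interval` cycles, so pipeline utilization grows linearly with
    the number of tasklets and is capped at 100%.
    """
    utilization = min(tasklets, dispatch_interval) / dispatch_interval
    return freq_mhz * utilization / instr_per_op


# Hypothetical values: a 350 MHz DPU and a loop of 6 instructions per operation.
for tasklets in (1, 4, 8, 11, 16):
    print(tasklets, round(dpu_throughput_mops(tasklets, 350, 6), 2))
```

In this model, adding tasklets beyond 11 changes nothing, because the pipeline is already issuing an instruction every cycle.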

Key Observations 2

DRAM Processing Units (DPUs) provide native hardware support for 32- and 64-bit integer addition and subtraction, leading to high throughput for these operations. DPUs do not natively support 32- and 64-bit multiplication and division, or floating point operations. These operations are emulated by the UPMEM runtime library, leading to much lower throughput.
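To get a feel for why emulation is slower, consider the textbook shift-and-add method for multiplication, which replaces one multiply with a loop of shifts, bit tests, and additions. The sketch below illustrates that general technique only. It is not the UPMEM runtime library's code, and the function name is hypothetical.

```python
def shift_add_mul32(a, b):
    """Multiply two unsigned 32-bit integers using only shifts, adds and bit tests.

    Returns the 32-bit (wrapped) product and the number of loop iterations.
    """
    mask = 0xFFFFFFFF
    a &= mask
    b &= mask
    product = 0
    steps = 0
    while b:
        if b & 1:
            # Add the shifted multiplicand when the current multiplier bit is set.
            product = (product + a) & mask
        a = (a << 1) & mask
        b >>= 1
        steps += 1
    return product, steps


print(shift_add_mul32(6, 7))
print(shift_add_mul32(3, 0x80000000))
```

Each loop iteration costs several native instructions, and a multiplier with a high bit set needs up to 32 iterations, so one emulated multiplication can cost far more than one native addition.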

Key Observations 3

The sustained bandwidth provided by the DRAM Processing Unit’s internal Working memory (WRAM) is independent of the memory access pattern (either streaming, strided, or random access pattern). All 8-byte WRAM loads and stores take one cycle, when the DRAM Processing Unit’s pipeline is full (i.e., with 11 or more tasklets).
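The three access patterns can be written down as index sequences, and the observation then says the cycle count depends only on how many accesses are made, not on which addresses they touch. The sketch below assumes exactly that one-cycle-per-access model with a full pipeline. The function names, the stride, and the 350 MHz frequency are assumptions for illustration.

```python
import random


def access_indices(pattern, n_accesses, n_elements, stride=4, seed=0):
    """Generate element indices for a streaming, strided, or random pattern."""
    if pattern == "streaming":
        return [i % n_elements for i in range(n_accesses)]
    if pattern == "strided":
        return [(i * stride) % n_elements for i in range(n_accesses)]
    if pattern == "random":
        rng = random.Random(seed)
        return [rng.randrange(n_elements) for _ in range(n_accesses)]
    raise ValueError(f"unknown pattern: {pattern}")


def wram_bandwidth_mb_s(indices, freq_mhz, bytes_per_access=8):
    """Estimate WRAM bandwidth, assuming one cycle per access at any address."""
    cycles = len(indices)  # The address values do not enter the cycle count.
    return len(indices) * bytes_per_access * freq_mhz / cycles


for pattern in ("streaming", "strided", "random"):
    idx = access_indices(pattern, n_accesses=1024, n_elements=256)
    print(pattern, wram_bandwidth_mb_s(idx, freq_mhz=350))
```

All three patterns give the same estimate here by construction, which is the point of the observation: WRAM behaves like a flat scratchpad rather than a cache with locality effects.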

Key Observations 4

The DRAM Processing Unit’s Main memory (MRAM) bank access latency increases linearly with the transfer size.

The maximum theoretical MRAM bandwidth is 2 bytes per cycle.

For data movement between the DRAM Processing Unit’s Main memory (MRAM) bank and the internal Working memory (WRAM), use large DMA transfer sizes when all the accessed data is going to be used.

For small transfers between the DRAM Processing Unit’s Main memory (MRAM) bank and the internal Working memory (WRAM), fetch more bytes than necessary within a 128-byte limit. Doing so increases the likelihood of finding data in WRAM for later accesses (i.e., the program can check whether the desired data is in WRAM before issuing a new MRAM access).

Choose the data transfer size between the DRAM Processing Unit’s Main memory (MRAM) bank and the internal Working memory (WRAM) based on the program’s WRAM usage, as it imposes a tradeoff between the sustained MRAM bandwidth and the number of tasklets that can run in the DRAM Processing Unit (which is dictated by the limited WRAM capacity).
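The tradeoff in this section can be sketched with a linear latency model, latency = alpha + beta × size. A peak of 2 bytes per cycle corresponds to beta = 0.5 cycles per byte. The fixed cost alpha = 77 cycles, the 64 KB WRAM capacity, and the function names are assumptions of this sketch, not values taken from these notes.

```python
def mram_latency_cycles(size_bytes, alpha=77.0, beta=0.5):
    """Linear latency model: fixed cost (alpha) plus a per-byte cost (beta)."""
    return alpha + beta * size_bytes


def mram_bandwidth_bytes_per_cycle(size_bytes, alpha=77.0, beta=0.5):
    """Sustained bandwidth of one transfer; approaches 1/beta for large sizes."""
    return size_bytes / mram_latency_cycles(size_bytes, alpha, beta)


def max_tasklets(buffer_bytes, buffers_per_tasklet=1, wram_bytes=64 * 1024):
    """How many tasklets fit if each one keeps its transfer buffers in WRAM."""
    return wram_bytes // (buffer_bytes * buffers_per_tasklet)


for size in (8, 64, 512, 2048):
    print(
        size,
        round(mram_bandwidth_bytes_per_cycle(size), 3),
        max_tasklets(size, buffers_per_tasklet=3),
    )
```

Larger transfers amortize the fixed cost and move closer to the 2 bytes per cycle peak. Under these assumptions, though, three 2,048-byte buffers per tasklet leave room for only 10 tasklets, one short of the 11 needed to fill the pipeline.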

Key Observations 5

When the access latency to a DRAM Processing Unit’s Main memory (MRAM) bank for a streaming benchmark (COPY-DMA, COPY, ADD) is larger than the pipeline latency (i.e., execution latency of arithmetic operations and WRAM accesses), the performance of the DRAM Processing Unit (DPU) saturates at a number of tasklets (i.e., software threads) smaller than 11. This is a memory-bound workload.

When the pipeline latency for a streaming benchmark (SCALE, TRIAD) is larger than the MRAM access latency, the performance of a DPU saturates at 11 tasklets. This is a compute-bound workload.
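Both cases fit a simple bottleneck model: per block of data, the MRAM transfer and the pipeline work each set a rate, and the slower one wins. The sketch assumes that MRAM transfers from different tasklets are serialized, that they overlap fully with pipeline work, and that one tasklet issues an instruction every 11 cycles. These are simplifications made for this sketch, and the cycle counts are hypothetical.

```python
import math


def blocks_per_cycle(tasklets, mram_cycles, pipeline_instr, dispatch_interval=11):
    """Throughput is limited by the slower of the pipeline and the MRAM transfers."""
    utilization = min(tasklets, dispatch_interval) / dispatch_interval
    pipeline_rate = utilization / pipeline_instr
    mram_rate = 1.0 / mram_cycles
    return min(pipeline_rate, mram_rate)


def saturation_tasklets(mram_cycles, pipeline_instr, dispatch_interval=11):
    """Smallest tasklet count at which adding tasklets stops helping."""
    needed = math.ceil(dispatch_interval * pipeline_instr / mram_cycles)
    return min(dispatch_interval, max(1, needed))


# Hypothetical per-block costs: MRAM transfer cycles vs. pipeline instructions.
print(saturation_tasklets(mram_cycles=1000, pipeline_instr=200))
print(saturation_tasklets(mram_cycles=1000, pipeline_instr=4000))
```

With a transfer cost of 1,000 cycles and only 200 instructions of work, the model saturates at 3 tasklets (memory-bound). With 4,000 instructions of work it keeps improving up to 11 tasklets (compute-bound).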
