2024. 10. 8. 00:57ㆍArtificialIntelligence/ECCV2024

ECCV 2024 Day2

Sometimes Less is More: The First Dataset Distillation Challenge

https://dd-challenge-main.vercel.app/#/

I wrote a paper in this area last year, so I enjoyed listening to this one. :)

Dataset Distillation Workshop Introduction

1st An Introduction to Dataset Distillation - Hakan Bilen

https://homepages.inf.ed.ac.uk/hbilen/

https://github.com/VICO-UoE/DatasetCondensation

* Summary

Condensing a very large image dataset into a small one

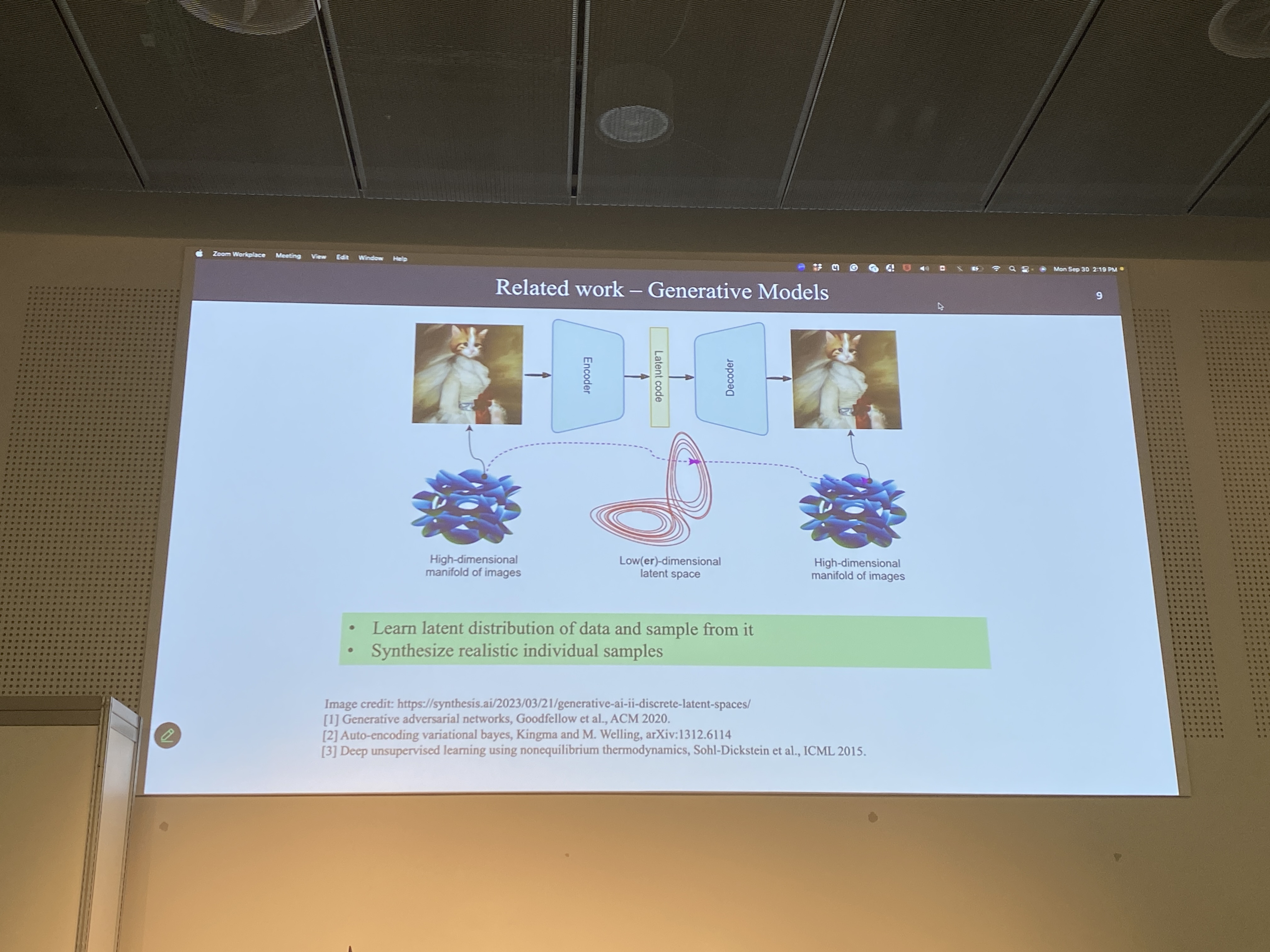

Generative model - synthetic dataset - useful for training

SOTA models: computation requirements keep growing (heavy deep networks)

Training models -> OpenAI - 5 gigawatt datacenter (it also takes a lot of energy)

Data efficient training

Gradient update

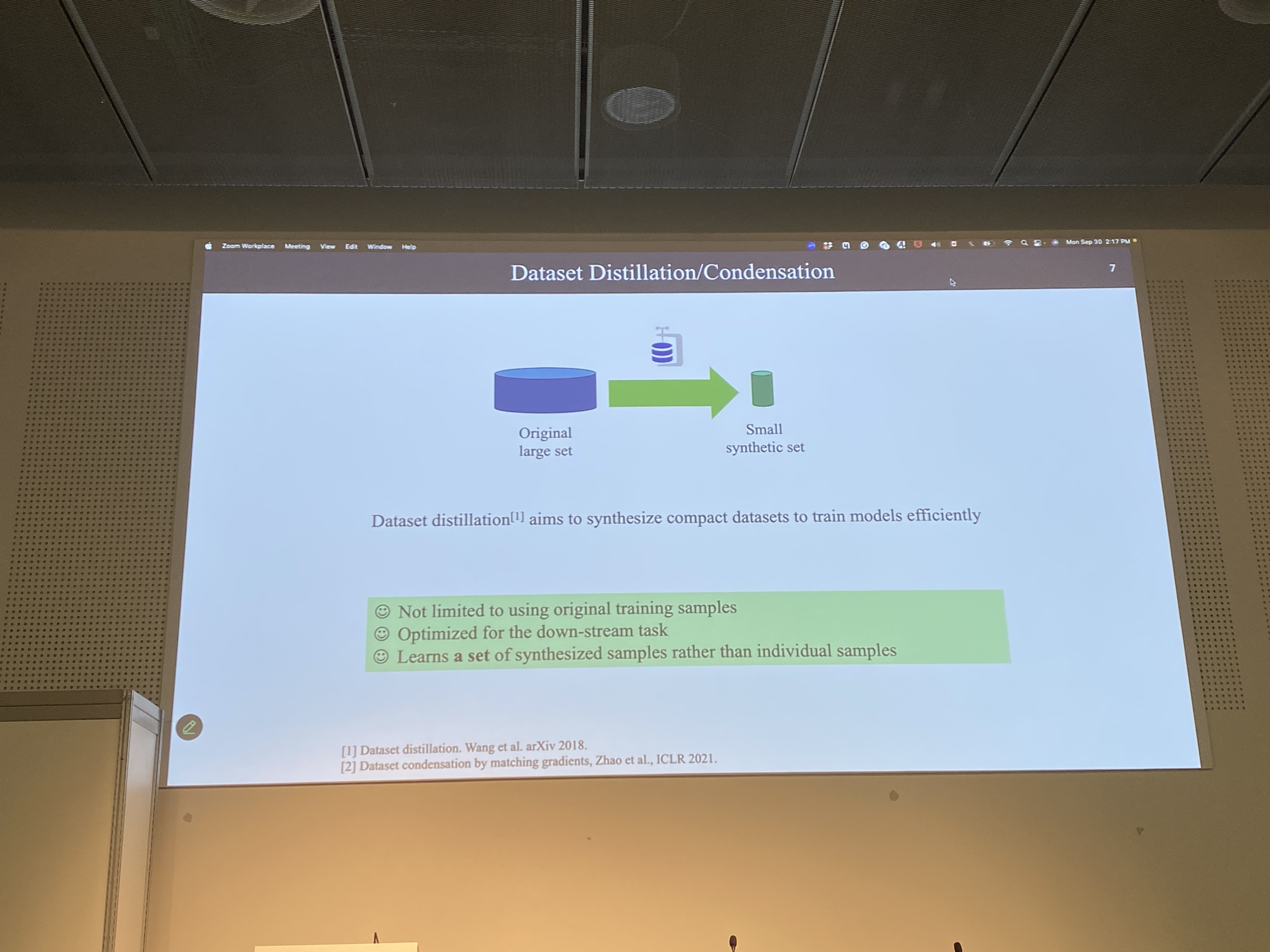

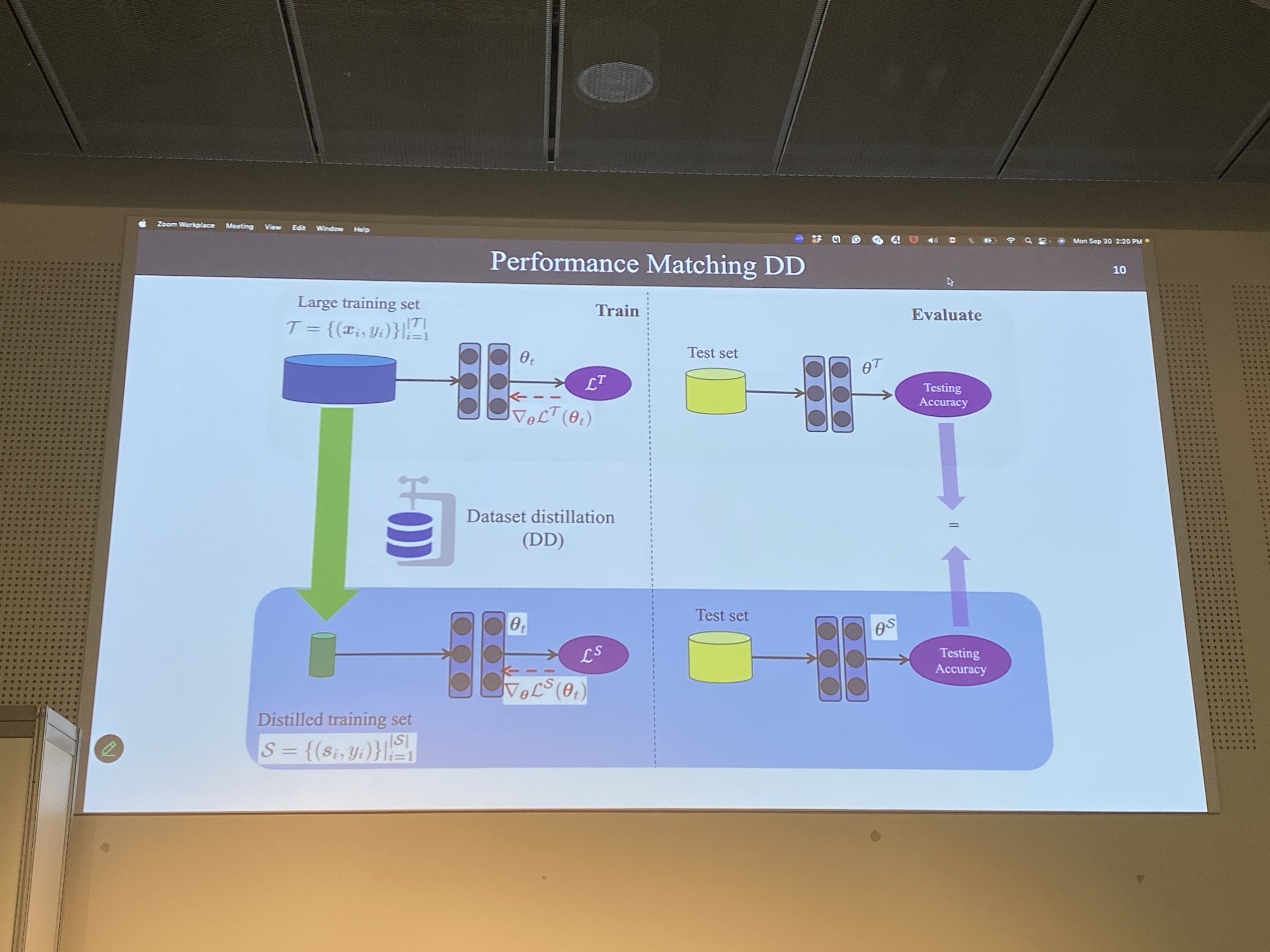

DD - synthesizing a small dataset from a very large dataset

By loss function
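For my own reference, "synthesizing a small dataset by a loss function" is commonly written as a bi-level optimization. The notation here is my assumption and not copied from the slides: $\mathcal{T}$ is the real training set, $\mathcal{S}$ the synthetic set, $\theta$ the model parameters, and $\mathcal{L}_{\mathcal{D}}(\theta)$ the average training loss on a dataset $\mathcal{D}$.

```latex
\mathcal{S}^{*} = \arg\min_{\mathcal{S}} \; \mathcal{L}_{\mathcal{T}}\big(\theta^{\mathcal{S}}\big)
\quad \text{subject to} \quad
\theta^{\mathcal{S}} = \arg\min_{\theta} \; \mathcal{L}_{\mathcal{S}}(\theta),
\qquad |\mathcal{S}| \ll |\mathcal{T}|
```

The inner problem trains a model on the synthetic set; the outer problem asks that this model does well on the real data.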

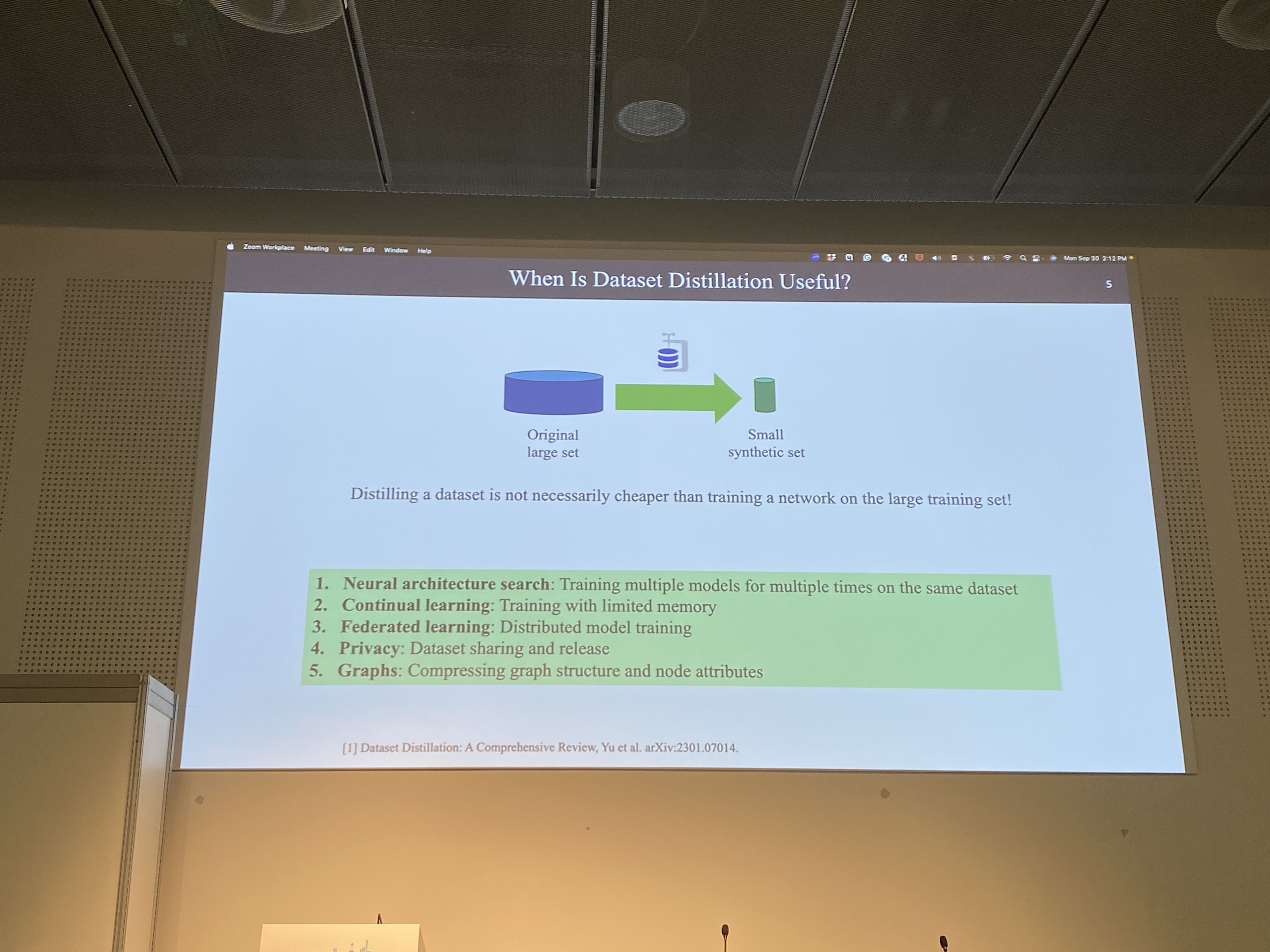

Distilling a dataset is not always the right thing to do!

- When is DD useful?

- DD is meaningful when memory is limited

- A coreset is not obtained with the downstream task in mind
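To make the coreset point concrete, here is a minimal sketch of herding, one classic coreset selection rule. Herding is my choice of example and was not necessarily the method on the slide; `herding_coreset` and the toy features are hypothetical. Note that the selection only looks at the feature mean, never at a downstream training loss, and that every selected row is an unmodified real sample.

```python
import numpy as np

def herding_coreset(features, k):
    """Greedily pick k row indices whose running mean tracks the full mean."""
    mean = features.mean(axis=0)
    selected = []
    running_sum = np.zeros_like(mean)
    available = np.ones(len(features), dtype=bool)
    for step in range(1, k + 1):
        # Mean of the subset if each candidate were added next.
        candidate_means = (running_sum + features) / step
        dists = np.linalg.norm(candidate_means - mean, axis=1)
        dists[~available] = np.inf  # never pick the same sample twice
        idx = int(np.argmin(dists))
        selected.append(idx)
        available[idx] = False
        running_sum += features[idx]
    return selected

rng = np.random.default_rng(0)
real_features = rng.normal(size=(200, 8))  # toy stand-in for image features
coreset_idx = herding_coreset(real_features, k=10)
coreset = real_features[coreset_idx]       # a subset of real samples, nothing is synthesized
```

DD differs in that the small set is optimized rather than selected, so its samples do not have to be real images.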

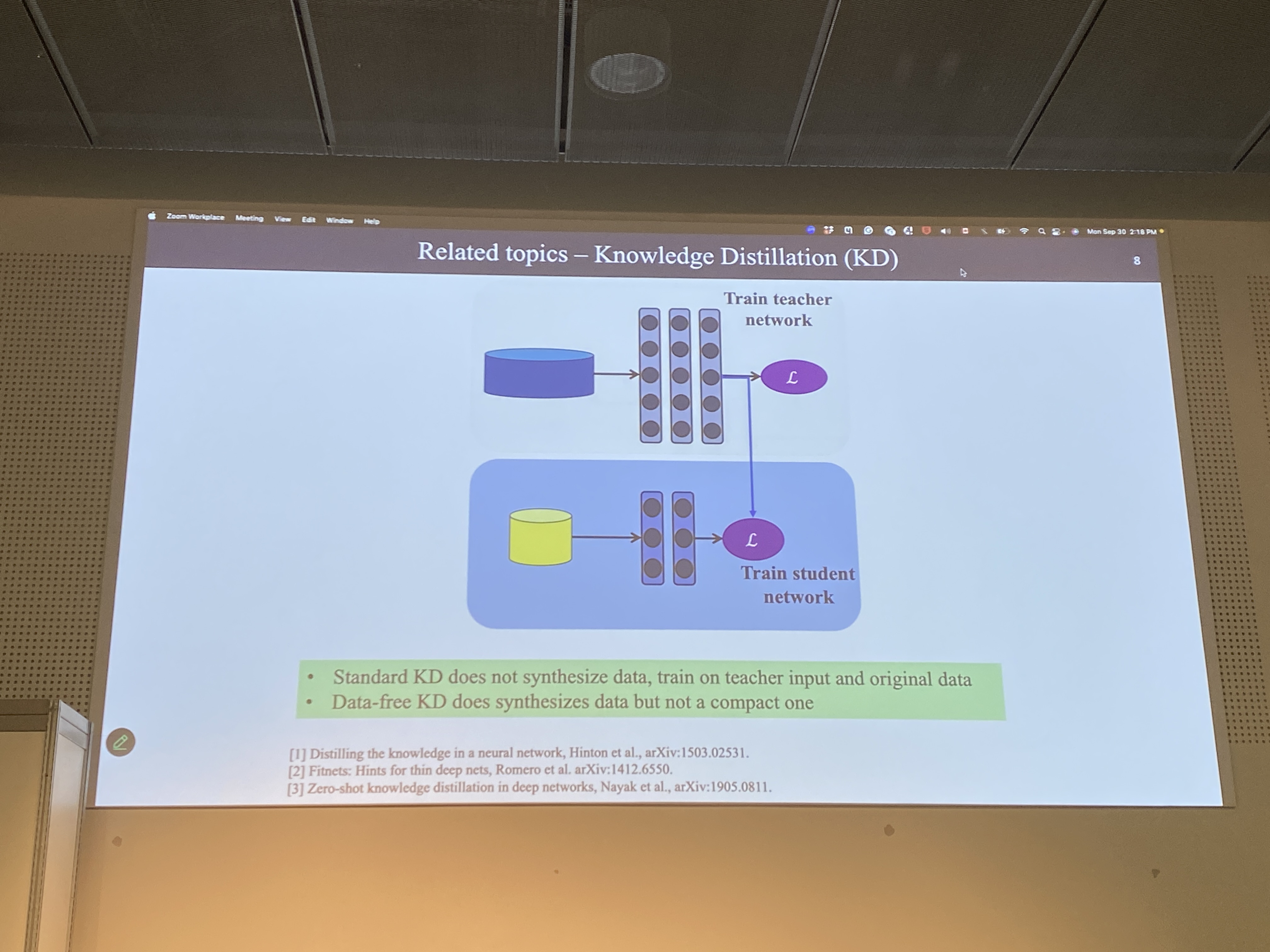

- Knowledge Distillation

- Teacher network -> student network

- It does not synthesize a dataset (it only hands knowledge over)

- Generative models -> generate distinct samples (realistic images)

- Dataset distillation learns images (informative for training)
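For contrast with DD, a minimal sketch of the teacher -> student transfer in knowledge distillation, using the standard temperature-softened KL loss. The logits and the temperature value are made-up illustration values, not numbers from the talk.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student)), axis=1)
    return float(temperature ** 2 * kl.mean())

teacher_logits = np.array([[5.0, 1.0, -2.0], [0.5, 3.0, 0.0]])
student_logits = np.array([[2.0, 2.0, 0.0], [1.0, 1.0, 1.0]])
loss = kd_loss(student_logits, teacher_logits)
```

What gets optimized here is the student network; the data stays untouched, which is the difference from DD noted above.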

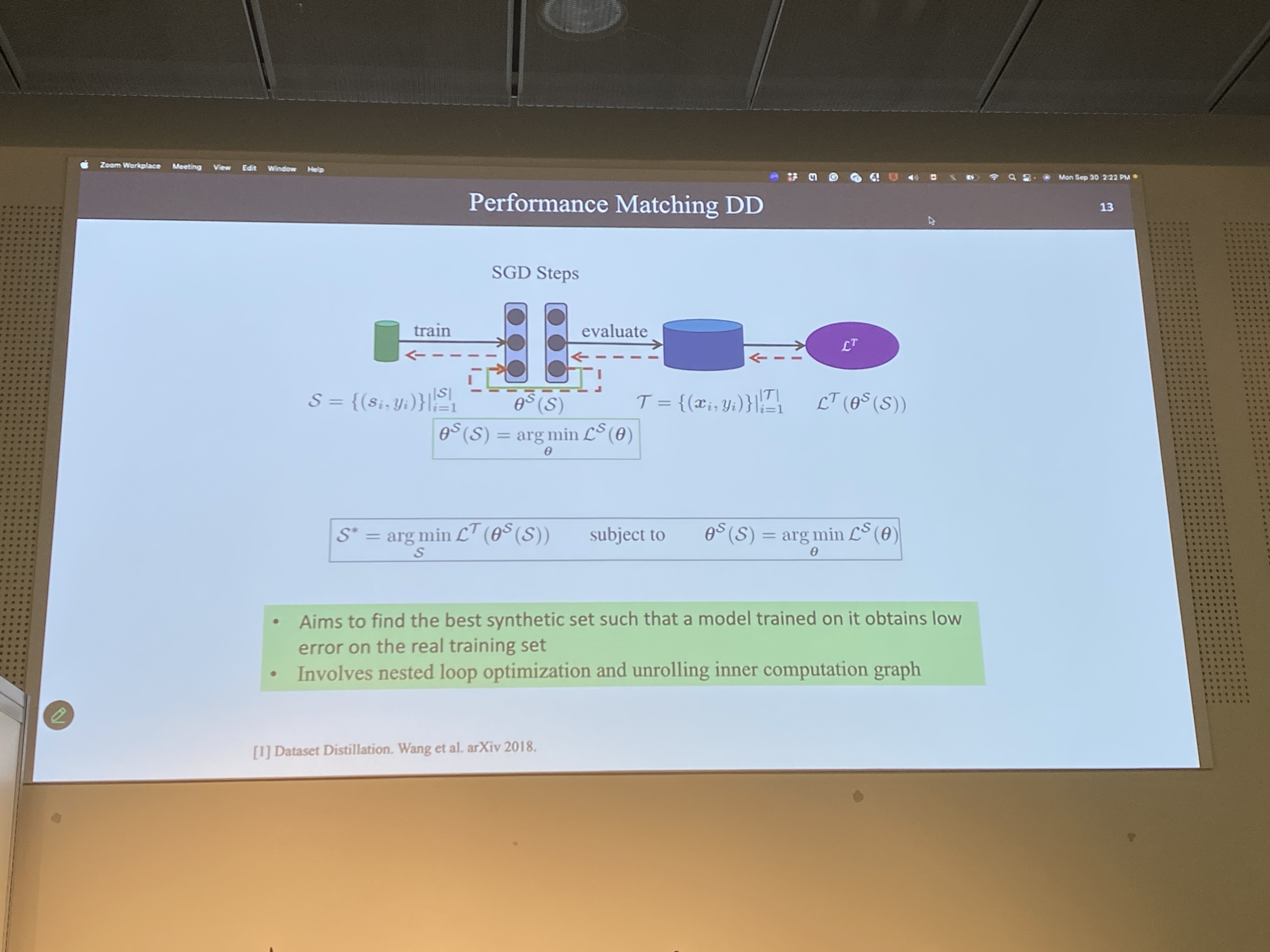

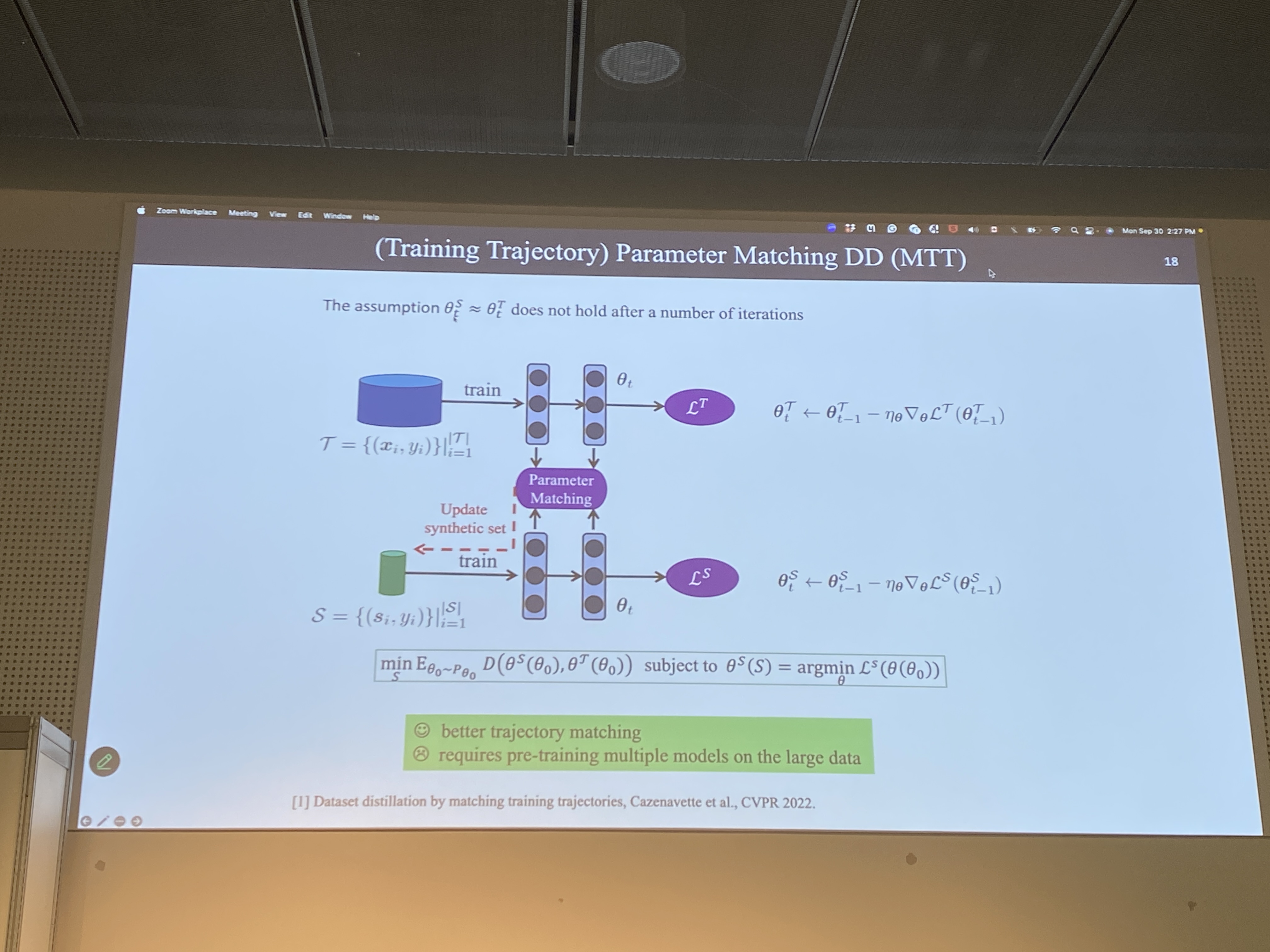

- Matching DD

- Make training on the synthetic set follow a similar path

- Small synthetic set

- Compute error -> loss function + gradient descent / update model -> back-propagate -> training dataset
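The loop in the bullets above can be sketched as gradient matching on a toy problem. Everything here is an assumption for illustration: a linear regression model stands in for a deep network, the analytic gradient stands in for back-propagation, the synthetic targets are kept fixed, and the backtracking step is my own addition to keep the toy stable. It is not the speaker's implementation.

```python
import numpy as np

def loss_grad(w, X, y):
    """Gradient of the mean squared error 1/(2n) * ||Xw - y||^2 w.r.t. w."""
    return X.T @ (X @ w - y) / len(X)

def matching_loss_and_grad(S, t, X, y, ws):
    """Gradient-matching loss averaged over model weights `ws`,
    plus its gradient w.r.t. the synthetic inputs S."""
    m = len(S)
    total, grad_S = 0.0, np.zeros_like(S)
    for w in ws:
        r = S @ w - t                                   # residual on synthetic data
        diff = loss_grad(w, S, t) - loss_grad(w, X, y)  # gradient mismatch
        total += 0.5 * diff @ diff
        # Chain rule through g_syn = S^T (S w - t) / m
        grad_S += (np.outer(r, diff) + np.outer(S @ diff, w)) / m
    return total / len(ws), grad_S / len(ws)

def distill(X, y, m=5, steps=200, lr=1.0, seed=0):
    rng = np.random.default_rng(seed)
    S = rng.normal(size=(m, X.shape[1]))     # learnable synthetic inputs
    t = rng.normal(size=m)                   # synthetic targets, kept fixed here
    ws = rng.normal(size=(8, X.shape[1]))    # sampled model weights
    history = []
    for _ in range(steps):
        loss, grad = matching_loss_and_grad(S, t, X, y, ws)
        history.append(loss)
        step = lr
        # Backtracking: shrink the step until the matching loss does not increase.
        while step > 1e-8:
            candidate = S - step * grad
            if matching_loss_and_grad(candidate, t, X, y, ws)[0] <= loss:
                S = candidate
                break
            step *= 0.5
    history.append(matching_loss_and_grad(S, t, X, y, ws)[0])
    return S, t, history

rng = np.random.default_rng(1)
X_real = rng.normal(size=(500, 3))
y_real = X_real @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=500)
S_syn, t_syn, history = distill(X_real, y_real)
```

The point of the sketch is the direction of the update: the model weights are only sampled, and the gradient step lands on the synthetic data itself.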

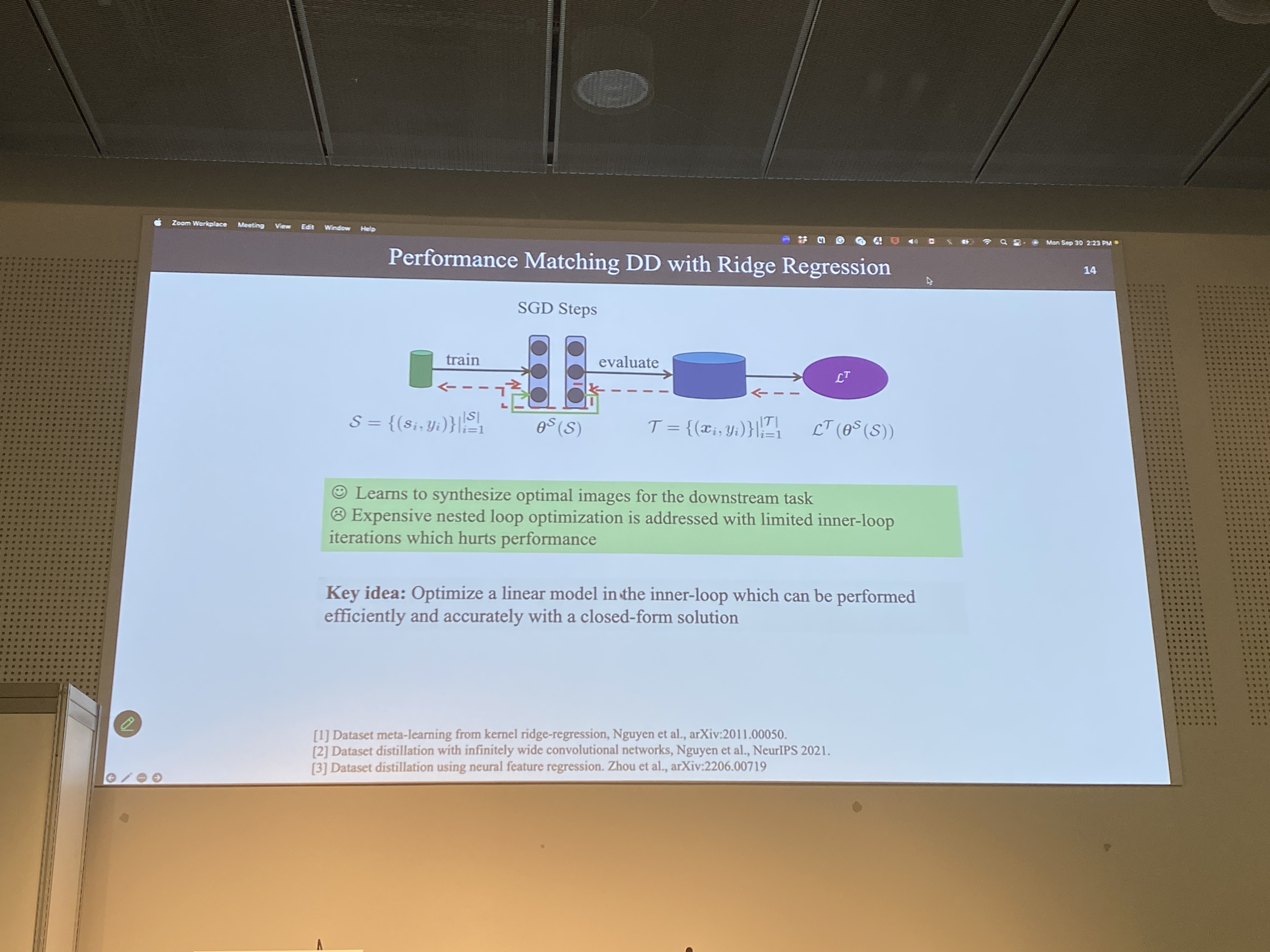

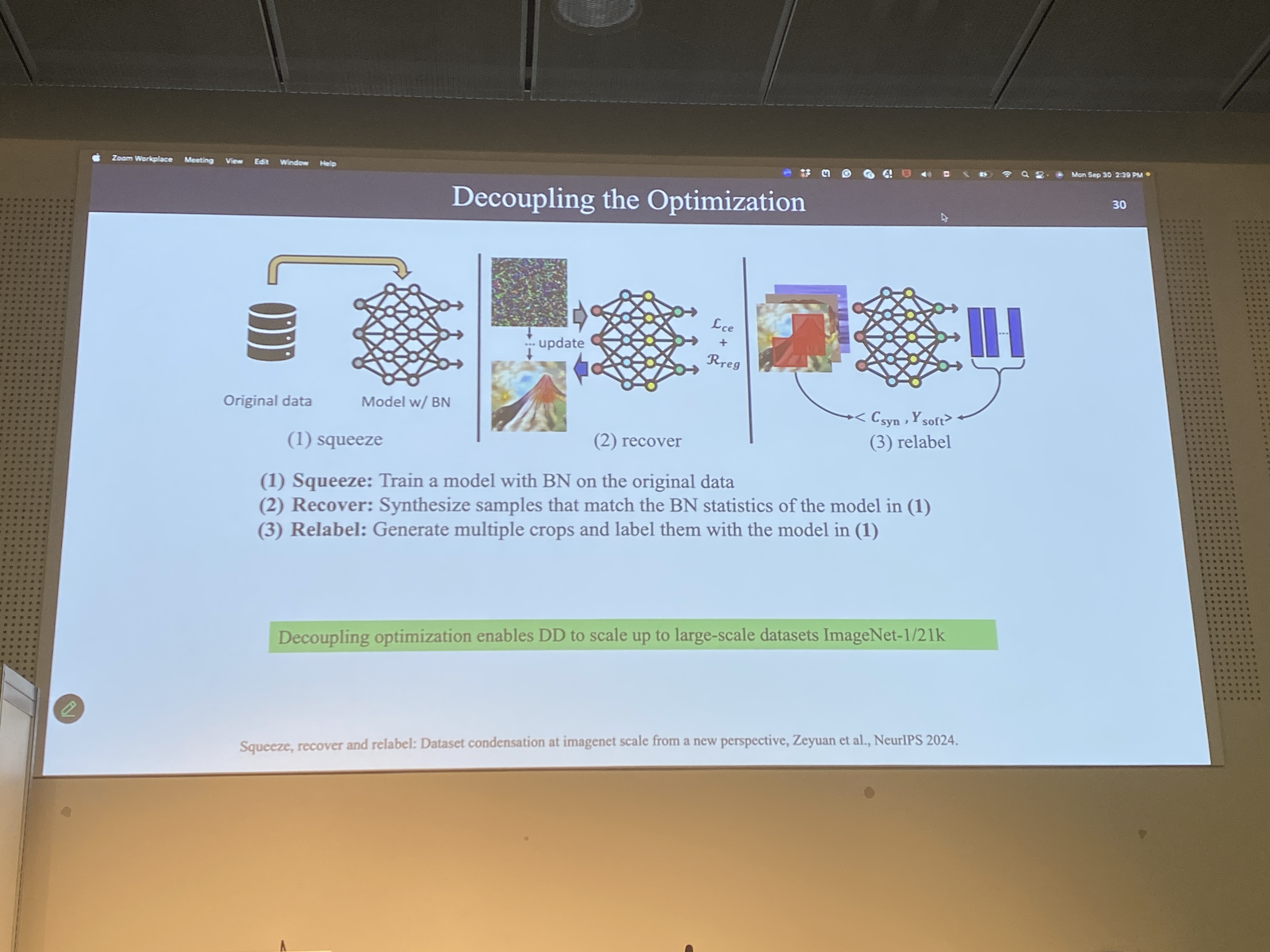

- Recent trend: making the inner-loop optimization very fast

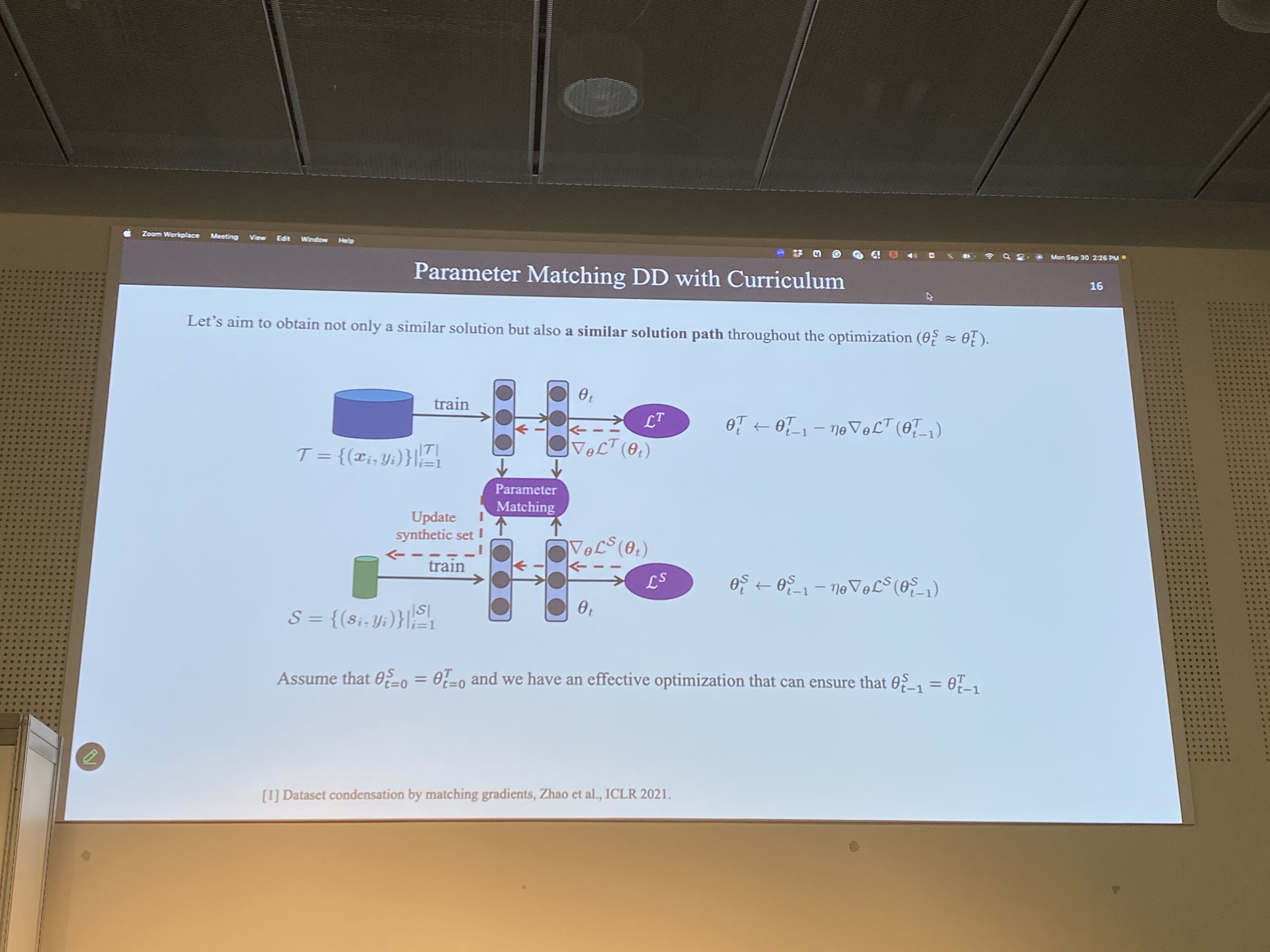

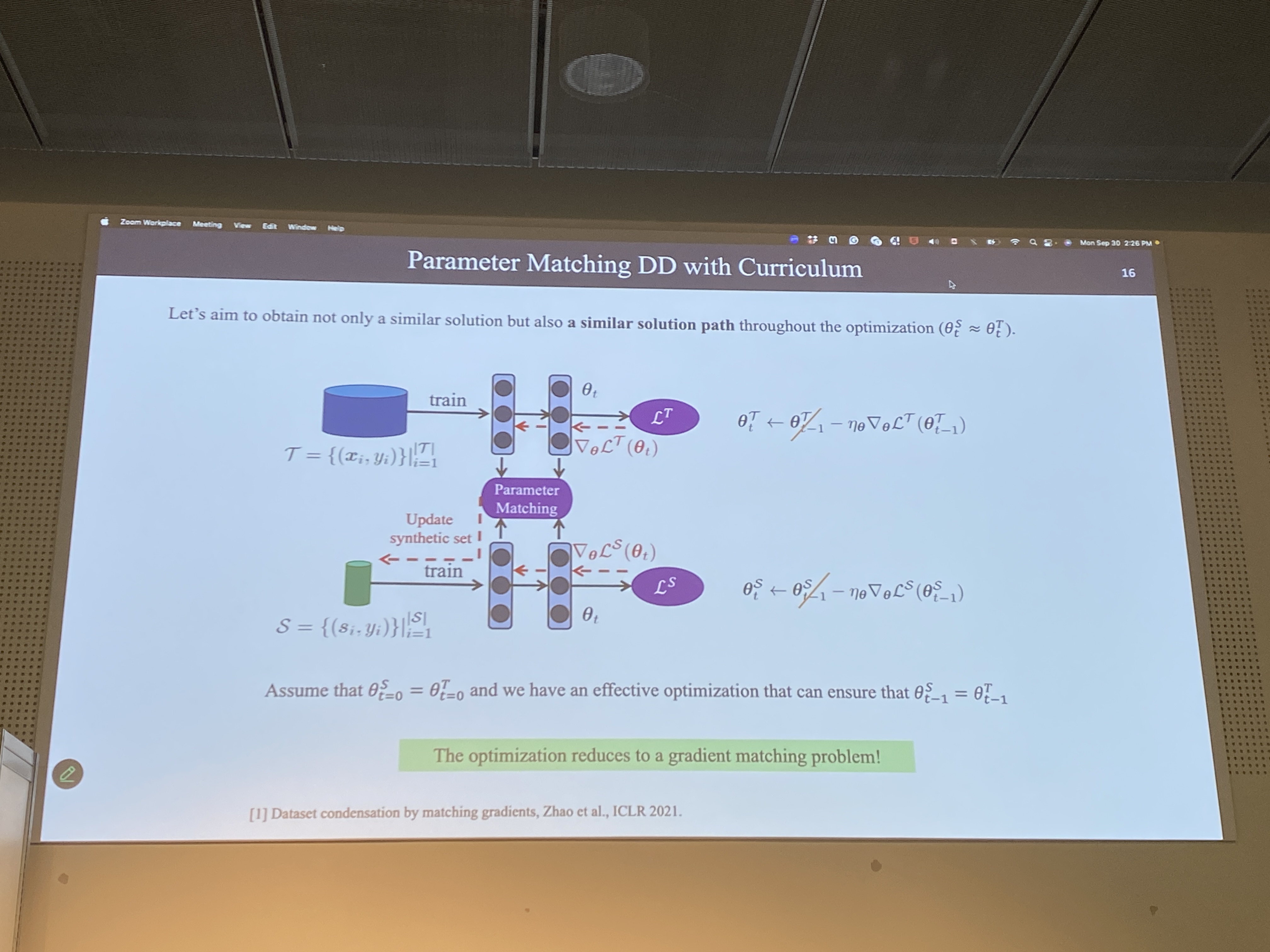

- Parameter matching DD

- The expectation that similar parameters -> similar performance

- This is what I worked on! + it has a problem (difficult to optimize)
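A minimal sketch of the quantity parameter matching tries to minimize, assuming the trajectory-matching formulation: train a student for N steps on the synthetic set starting from an expert checkpoint, then compare it with the expert M steps later, normalized by how far the expert moved. The linear model, `gd_steps`, and all step counts are hypothetical choices for illustration.

```python
import numpy as np

def gd_steps(w, X, y, n_steps, lr):
    """Run full-batch gradient descent on mean squared error; return all iterates."""
    trajectory = [w.copy()]
    for _ in range(n_steps):
        w = w - lr * X.T @ (X @ w - y) / len(X)
        trajectory.append(w.copy())
    return trajectory

def trajectory_matching_loss(expert, start, M, S, t, N, lr):
    """Normalized squared distance between the student's parameters after N steps
    on the synthetic set and the expert's parameters M steps after `start`."""
    student = gd_steps(expert[start], S, t, N, lr)[-1]
    target = expert[start + M]
    num = np.sum((student - target) ** 2)
    den = np.sum((expert[start] - target) ** 2)
    return float(num / den)

rng = np.random.default_rng(0)
X_real = rng.normal(size=(200, 4))
y_real = X_real @ np.array([1.0, 0.0, -1.0, 2.0])
expert = gd_steps(np.zeros(4), X_real, y_real, n_steps=50, lr=0.1)

# Random noise as the synthetic set vs. the real data itself as a sanity check.
S_noise, t_noise = rng.normal(size=(5, 4)), rng.normal(size=5)
loss_noise = trajectory_matching_loss(expert, 10, 5, S_noise, t_noise, N=5, lr=0.1)
loss_real = trajectory_matching_loss(expert, 10, 5, X_real, y_real, N=5, lr=0.1)
```

Actually optimizing the synthetic set means differentiating this loss through all N unrolled student steps, which is where the optimization difficulty and the cost come from.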

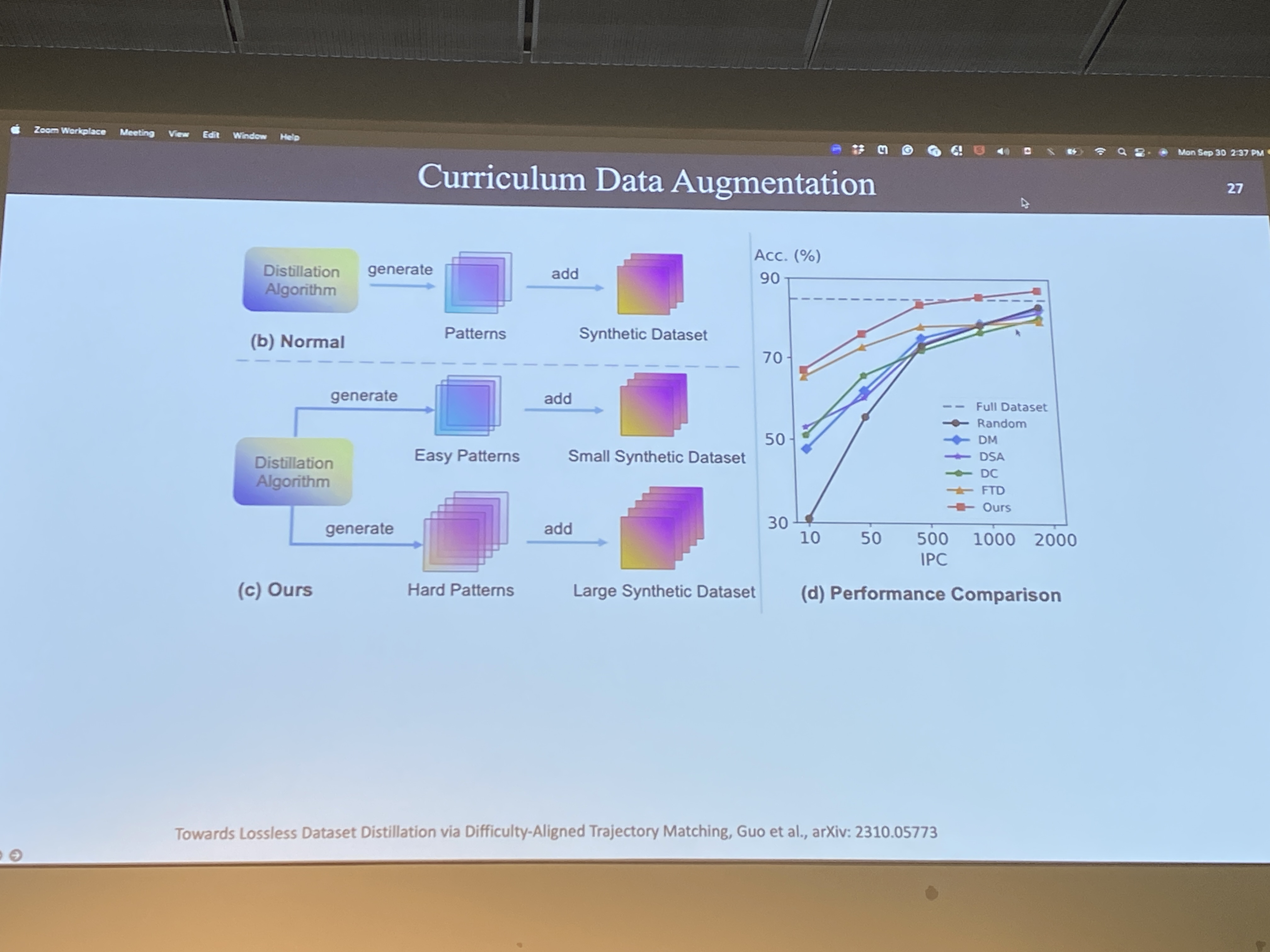

- Curriculum approach

- Each training step T

- Still expensive

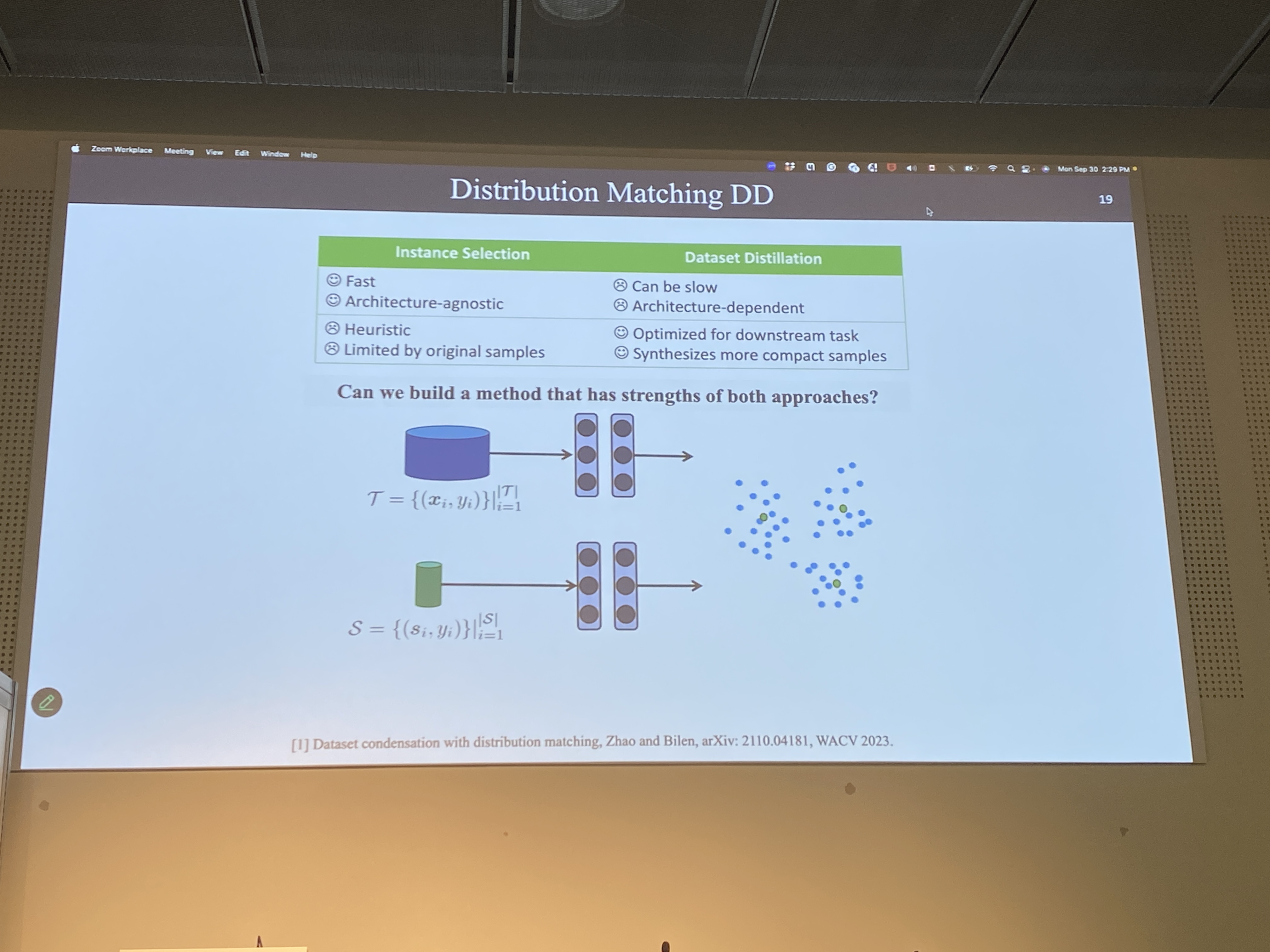

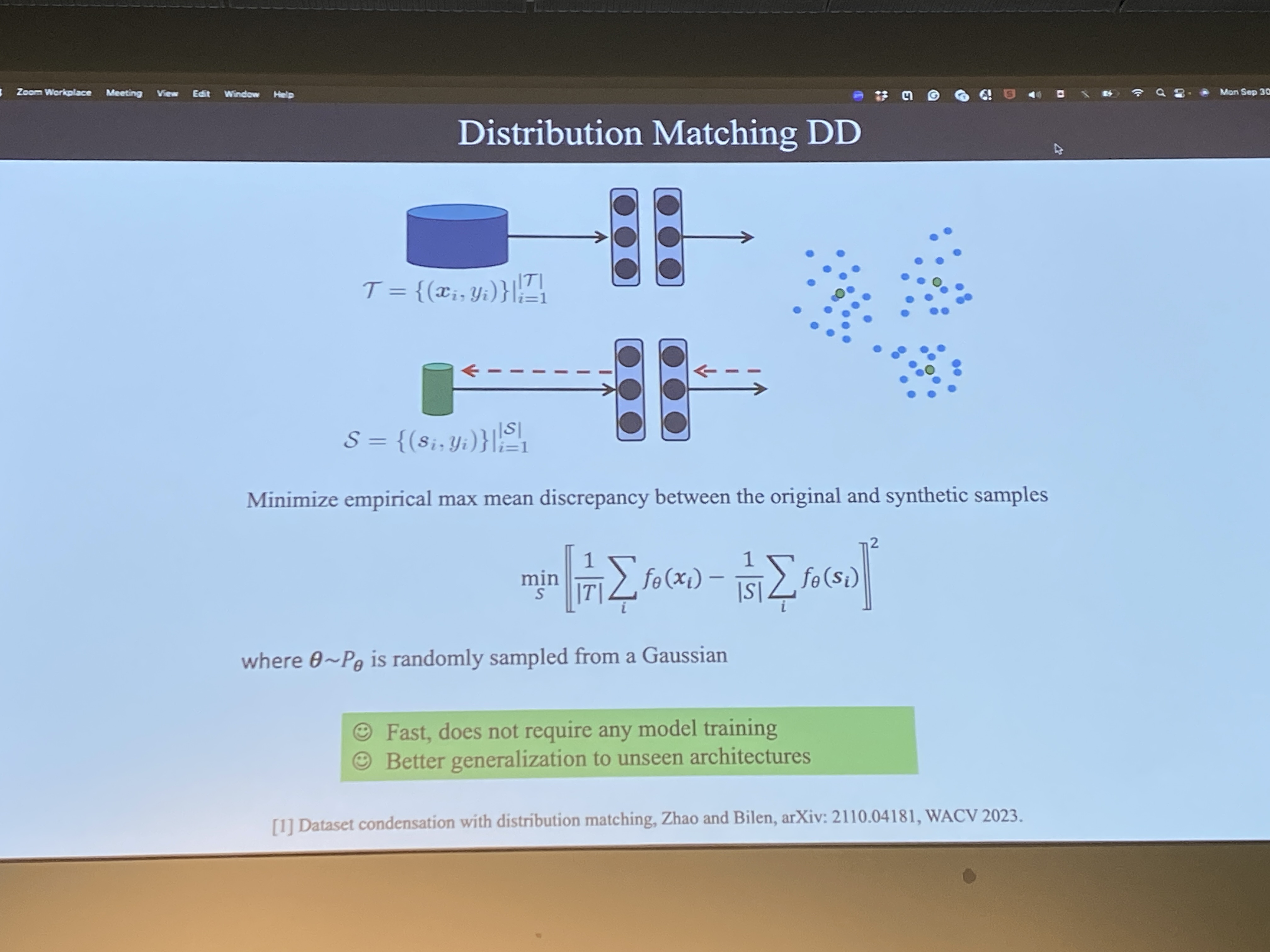

- Distribution Matching in DD / another paper I had read

- The graphs look really similar too... I should read it again.

- DD with GAN
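Going back to distribution matching from the list above, a minimal sketch of the idea as I understand it: push the mean embedding of the synthetic samples toward the mean embedding of the real samples, with no inner training loop at all. The random ReLU embedding, the single class, and keeping the embedding `W` fixed across steps are simplifying assumptions of mine.

```python
import numpy as np

def embed(X, W):
    """Random ReLU features, a stand-in for a randomly initialized network."""
    return np.maximum(X @ W.T, 0.0)

def dm_loss_and_grad(S, X, W):
    """Squared distance between mean embeddings, and its gradient w.r.t. S."""
    pre = S @ W.T
    diff = np.maximum(pre, 0.0).mean(axis=0) - embed(X, W).mean(axis=0)
    loss = float(diff @ diff)
    # d loss / d S_i = (2/m) * W^T (diff * 1[pre_i > 0])
    grad = (2.0 / len(S)) * ((pre > 0) * diff) @ W
    return loss, grad

rng = np.random.default_rng(0)
d, k = 5, 64
X_real = rng.normal(loc=2.0, size=(500, d))   # toy "real" samples of one class
S = rng.normal(size=(10, d))                   # synthetic samples start as noise
W = rng.normal(size=(k, d)) / np.sqrt(d)       # fixed random embedding

losses = []
for _ in range(300):
    loss, grad = dm_loss_and_grad(S, X_real, W)
    losses.append(loss)
    S -= 0.1 * grad                            # update the synthetic samples directly
losses.append(dm_loss_and_grad(S, X_real, W)[0])
```

Because no model is trained inside the loop, each update is cheap compared with the parameter matching approach.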

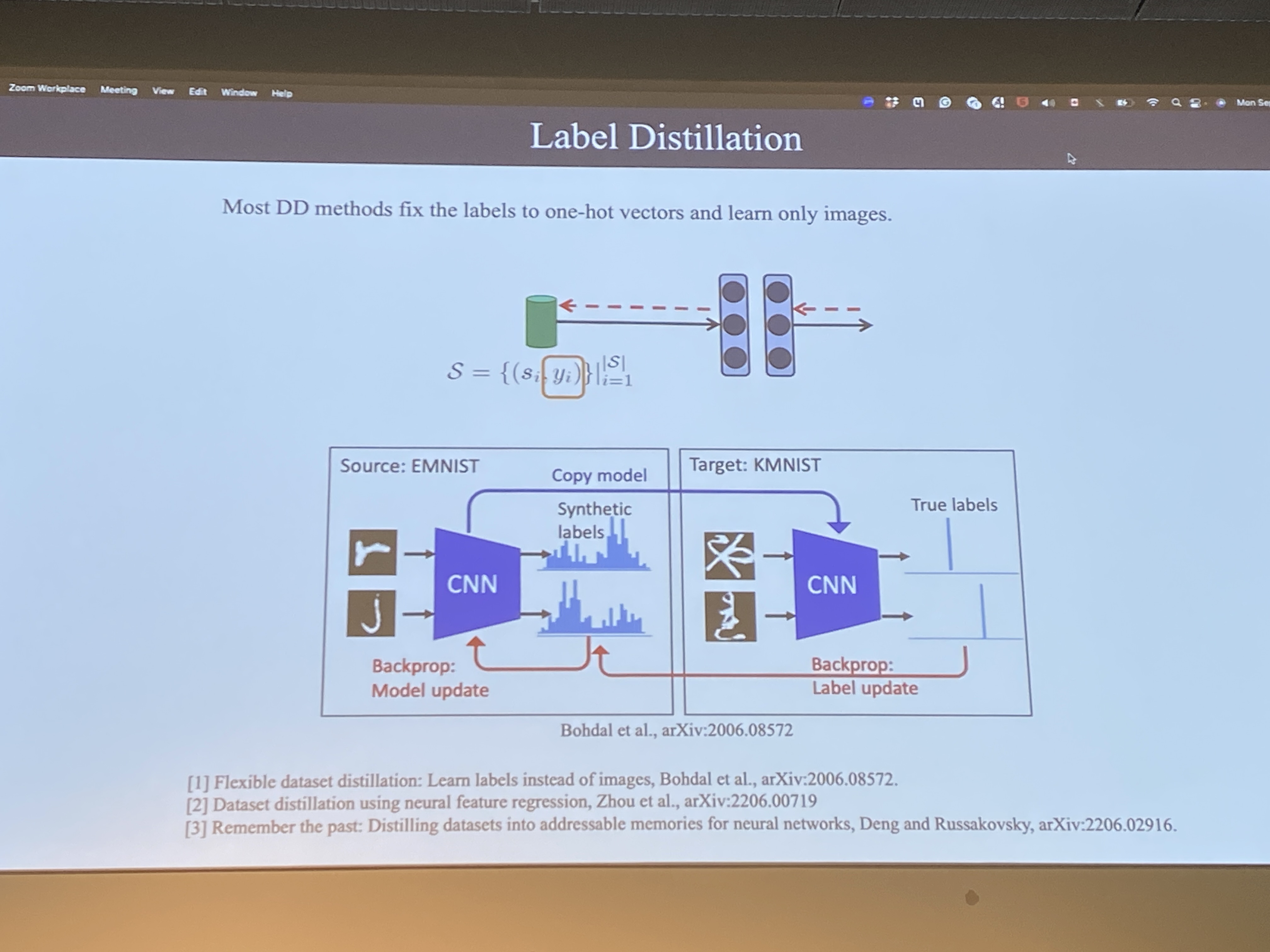

- Label distillation - looks interesting!

- How to do parameter distillation?

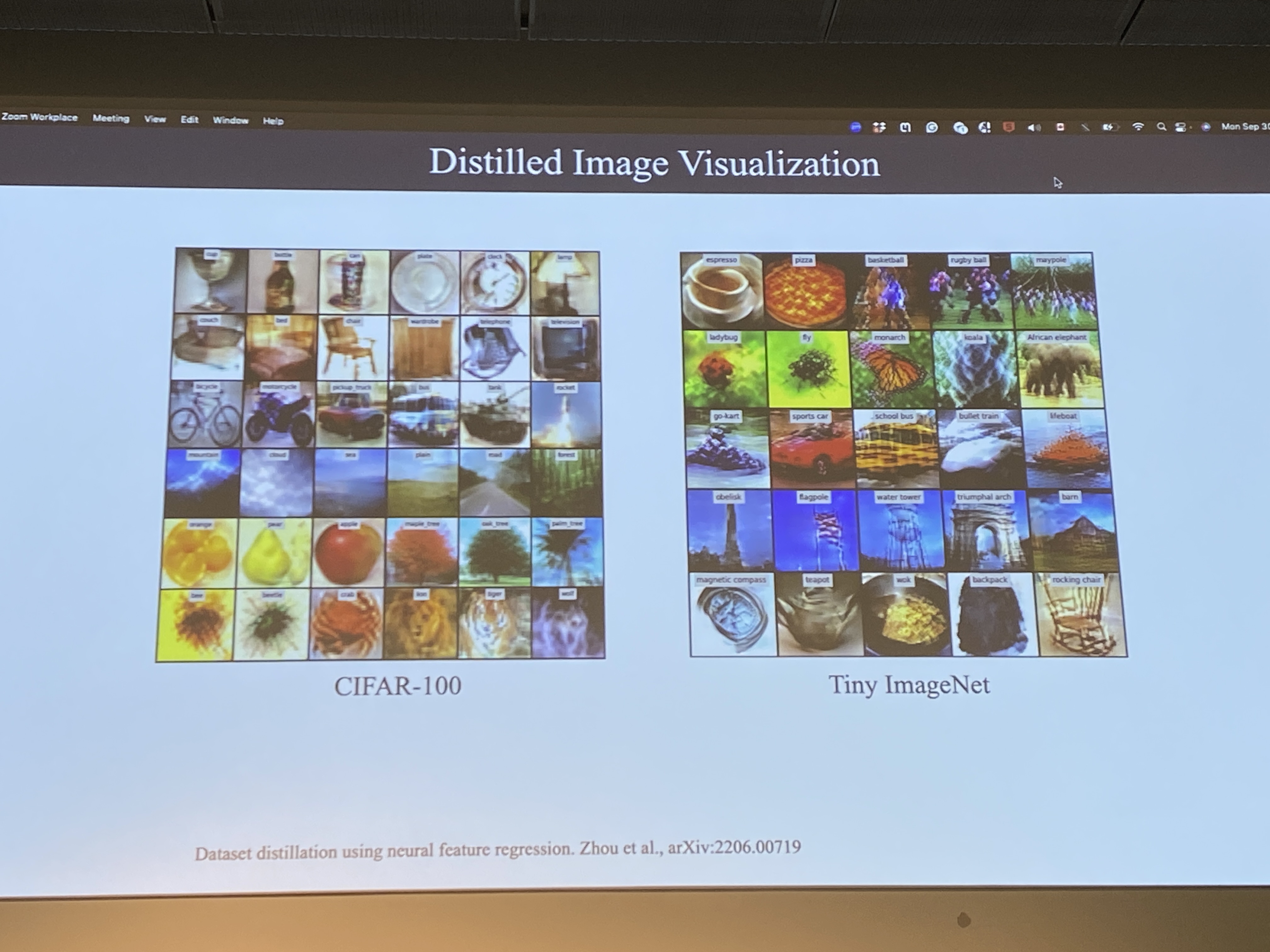

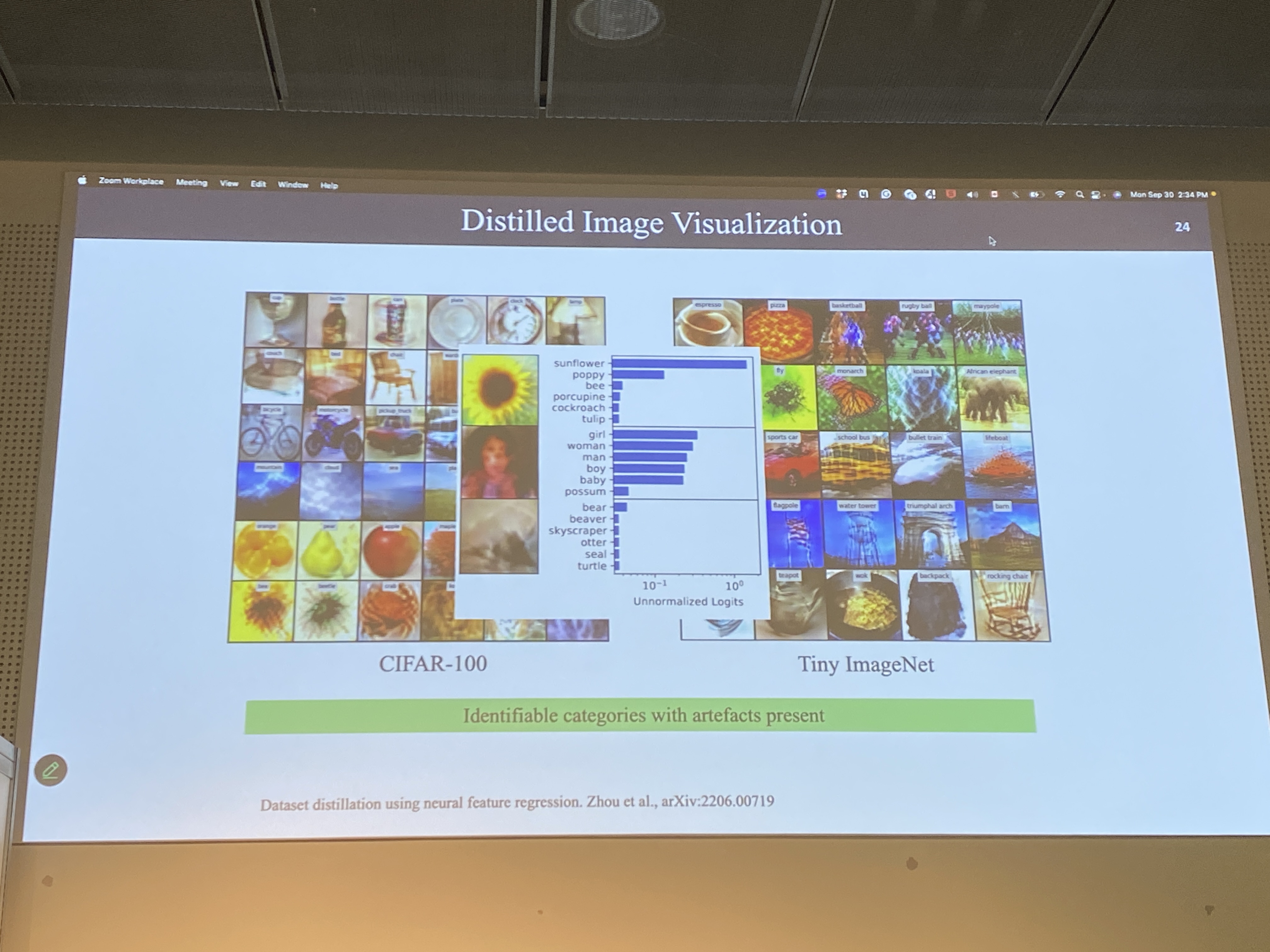

- Informative images

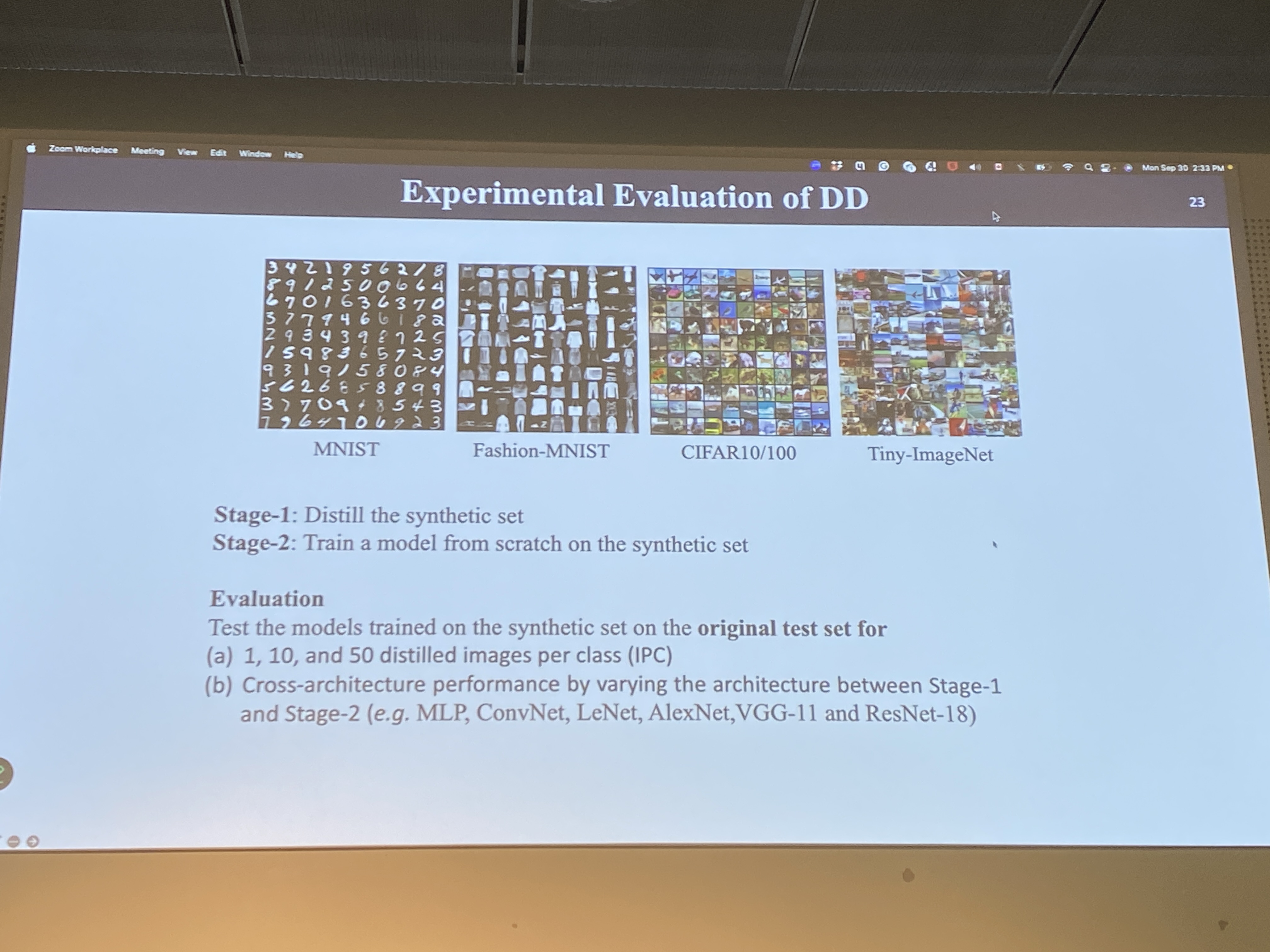

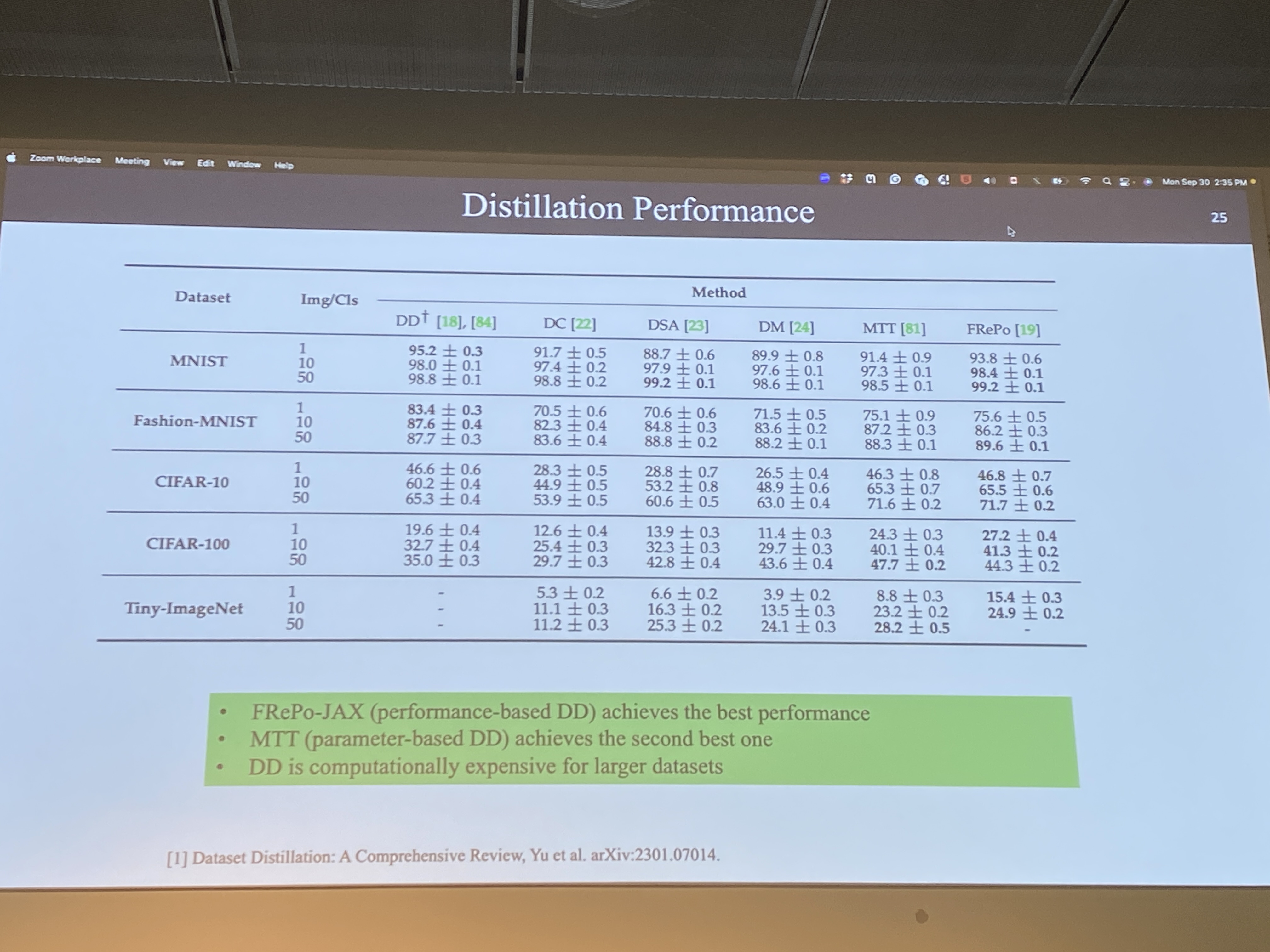

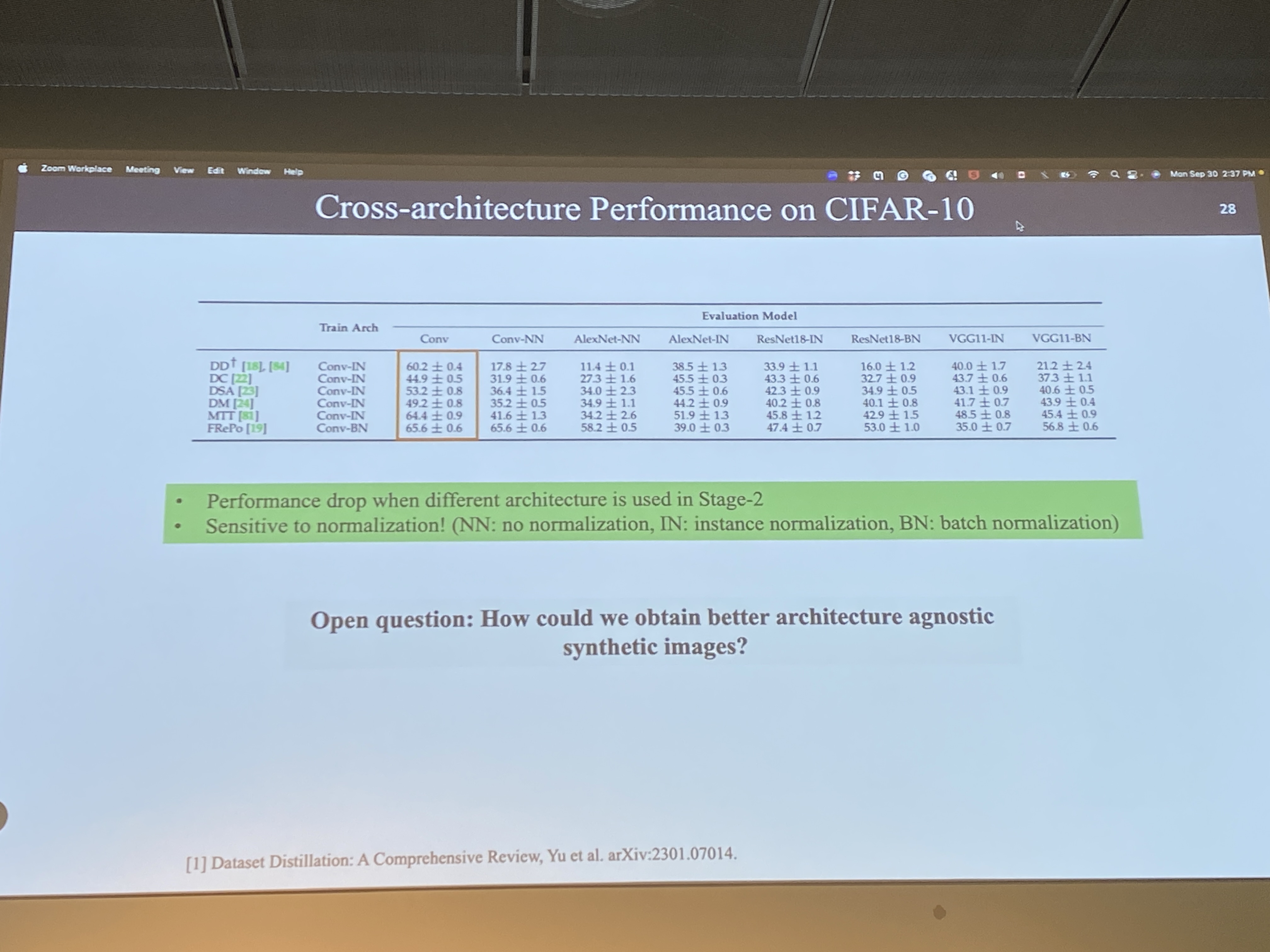

- Table comparing distillation performance

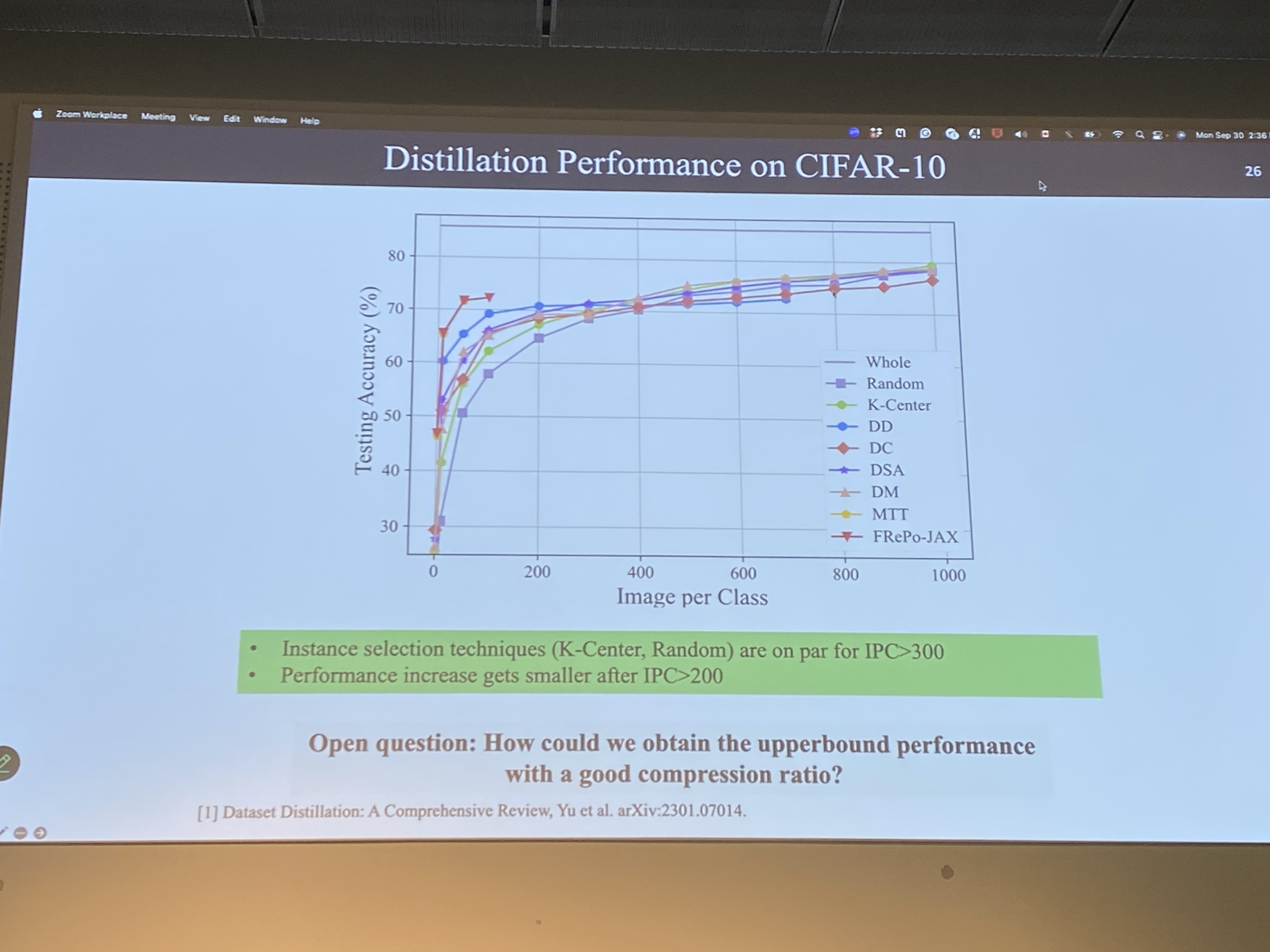

- Performance converges as IPC (images per class) increases

- There are still problems we need to solve + other open questions
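To put the IPC budget mentioned above in numbers, a small sketch. CIFAR-10 (10 classes, 50,000 training images) is my own example here, not something taken from the slides, and `distilled_budget` is a hypothetical helper.

```python
def distilled_budget(ipc, num_classes, train_size):
    """Return (number of synthetic images, fraction of the original training set)."""
    n_synthetic = ipc * num_classes
    return n_synthetic, n_synthetic / train_size

# CIFAR-10: 10 classes, 50,000 training images.
for ipc in (1, 10, 50):
    n, frac = distilled_budget(ipc, num_classes=10, train_size=50_000)
    print(f"IPC={ipc:>2}: {n:>3} images = {frac:.2%} of the training set")
```

Even IPC=50 keeps only 1% of the original images, so the budgets being compared are all very small.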

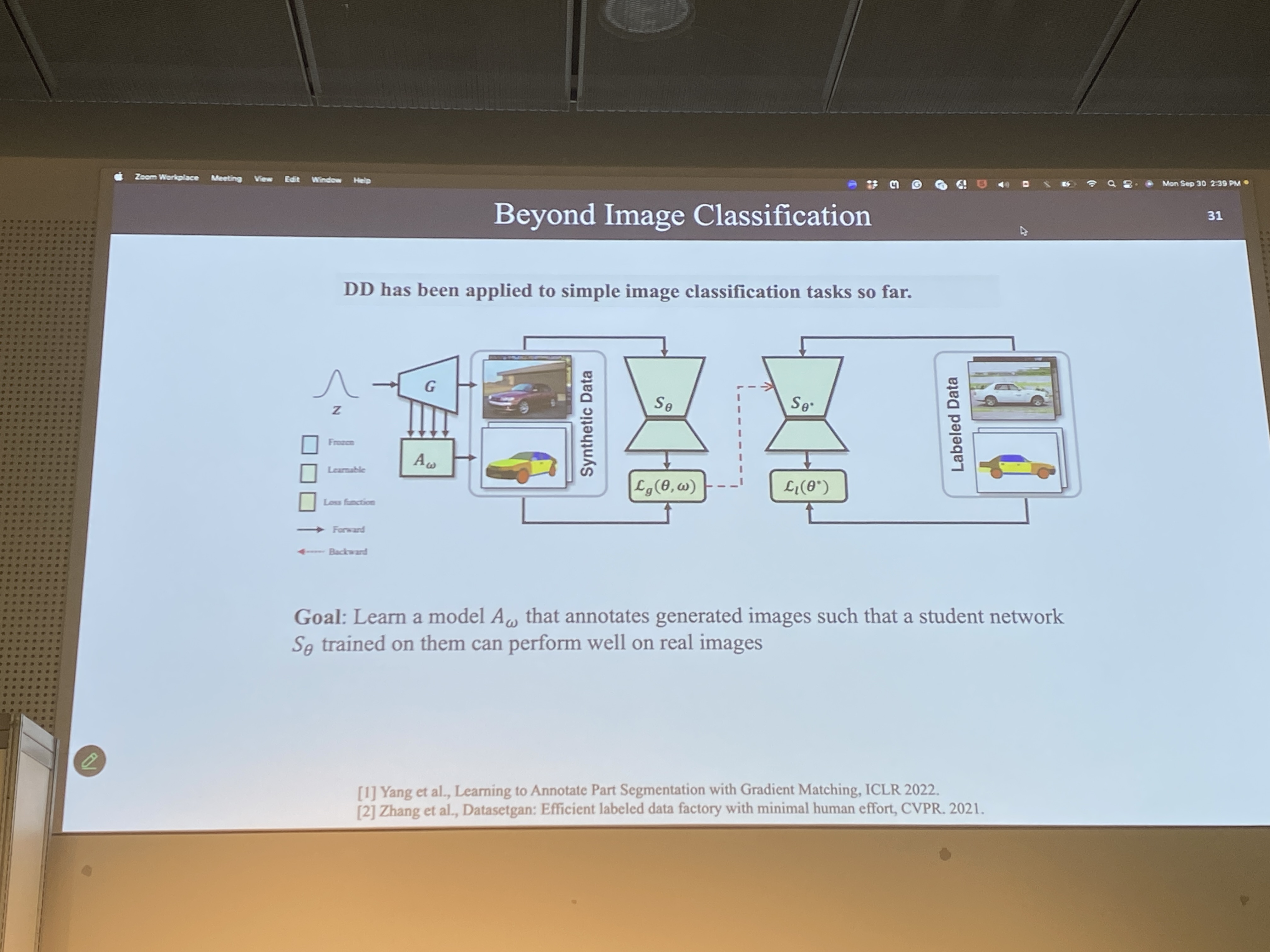

- Beyond image classification -> for now the focus is only on classification

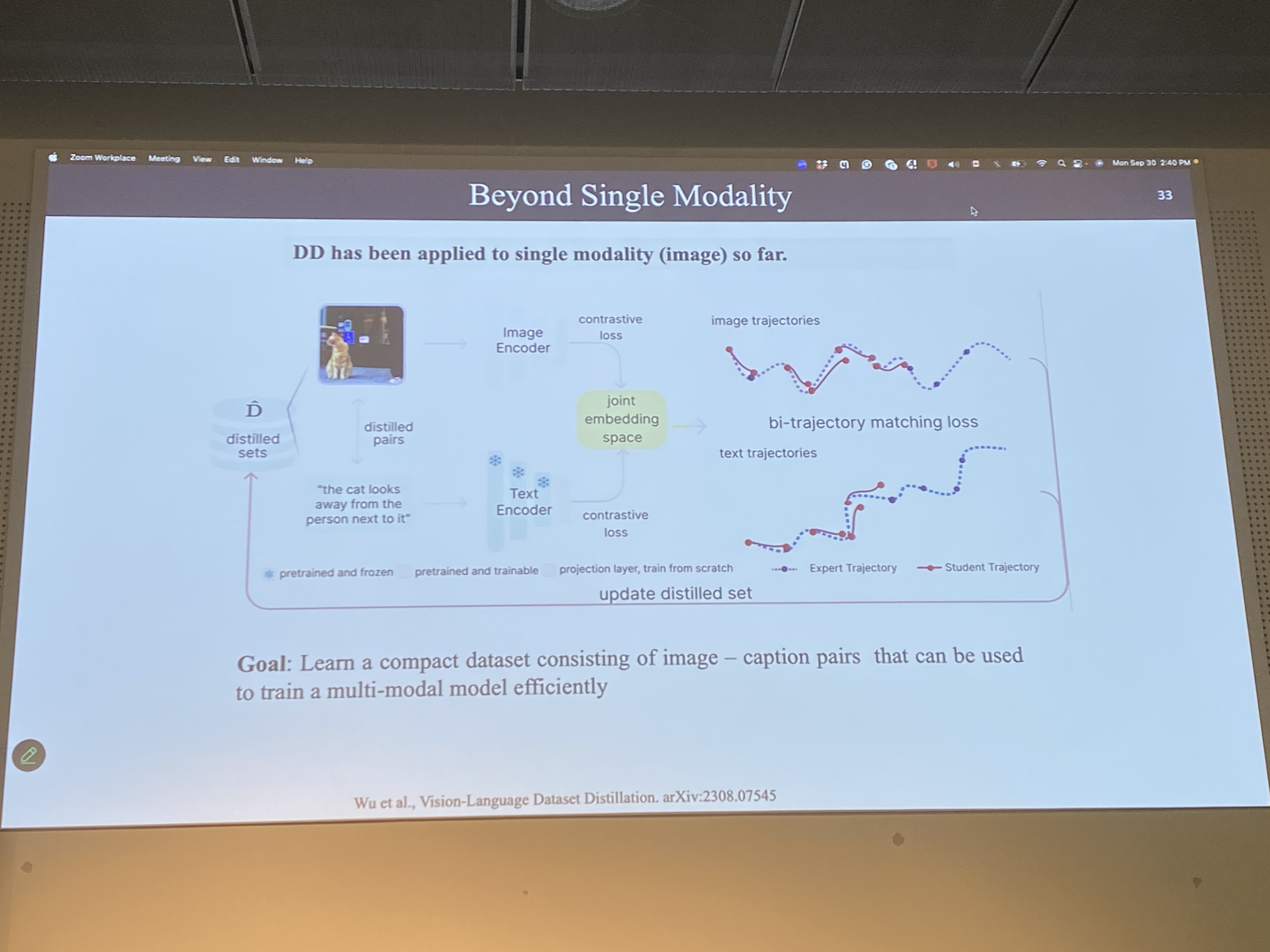

- Multi-modal models (vision-language tasks): still a long way to go.

- Images with some accompanying captions
